Historically, technology and modernization have vastly influenced the way we live and work. Nevertheless, cognitive assessment measures remain similar to what they were several years ago. Although the area has advanced in the last decade, even digitally developed tools have yet to be sufficiently explored. Among these measures, we can define computer-based/digital instruments as those that use the computer interface or other digital devices in their administration, scoring or interpretation (Kane & Parsons, 2017; Parsey & Schmitter-Edgecombe, 2013).

Computerized testing offers potential advantages, such as more motivating tasks, greater standardization of administration, automated scoring and immediate feedback. Thus, many studies focus on analyzing the comparability between digital and traditional forms of evaluation. Computerized testing was itself developed with the aim of greater efficiency (more expeditious administration, adaptive possibilities and broader reach), as well as better psychometric properties (Csapó, Molnár & Nagy, 2014; Moncaleano & Russell, 2018; Zygouris & Tsolaki, 2015).

Conversely, possible disadvantages of said tools must also be considered, including the following: the level of the examinee's familiarity with digital devices, which may influence their performance; discrepancy between data generated on different platforms due to hardware and operating systems; possible reduction of direct contact between examinee and evaluator; higher potential for misuse, among others (Bauer et al., 2012; Lumsden, Edwards, Lawrence, Coyle & Munafò, 2016; Zygouris & Tsolaki, 2015).

Another aspect to be examined is the adaptation of paper-and-pencil tests to the digital environment. New psychometric studies must be conducted, taking into account the possibility of disparity between the two versions' properties. The diverse effects caused by a digital testing environment must also be considered, since distinct groups may react differently to the platform. Individuals with ADHD (Attention Deficit / Hyperactivity Disorder), for example, do not react as healthy controls do to highly motivating tasks, which may affect the outcome on computer-based measures (Csapó et al., 2014; Kane & Parsons, 2017; Lumsden et al., 2016).

In order to better comprehend these differences, one needs to study ADHD itself. It is understood as a complex disorder of neurobiological development, whose symptoms may appear in the preschool years and extend into adulthood. According to the Diagnostic and Statistical Manual of Mental Disorders, 5th edition (DSM-5; American Psychiatric Association (APA), 2013), ADHD is characterized by persistent symptoms of inattention and/or hyperactivity-impulsivity. It can lead to personal, social, academic and professional losses, along with worse performance in tasks that require attention and executive functions (EF). ADHD is a common disorder in childhood, with an estimated prevalence of 5.3 % among children and adolescents (Mahone, 2012; Willcutt, 2012).

Different models have been proposed to explain the cognitive profile of individuals with ADHD. Among them, Barkley's model describes a hierarchical relationship in which a central deficit in response inhibition would lead to secondary impairments in other executive functions, such as self-regulation, working memory, internalization of speech and reconstitution of experiences. These impairments would then lead to decreased control of motor behavior, leaving the individual poorly adapted to environmental demands. Sergeant argues that these deficits may not be specific to ADHD, but are possibly related to cognitive-energetic dysfunctions. Sonuga-Barke and Halperin, in turn, attribute the disorder's cognitive heterogeneity to multiple developmental pathways, associated with inhibitory control, reward mechanisms and temporal perception, in which different deficits may be complementary (Barkley, 1997; Sergeant, 2005; Sonuga-Barke & Halperin, 2010).

A single cognitive model that fully explains the clinical phenotype of ADHD is yet to be described. Nonetheless, the literature has consistently demonstrated the association between the disorder and deficits in various cognitive functions, with emphasis on inhibitory control, working memory, sustained attention and processing speed (Delgado et al., 2012; Messina & Tiedemann, 2009; Rueda & Muniz, 2012; Willcutt, Doyle, Nigg, Faraone & Pennington, 2005).

Despite the association between ADHD and worse performance in various cognitive functions, at the individual level these deficits are not sensitive enough, nor are the tests sufficient, for a diagnosis, which is made through clinical assessment. However, considering ADHD's cognitive heterogeneity, it is of utmost importance to carry out a comprehensive cognitive evaluation in order to draw up a treatment plan fit for each individual. To do so, it is necessary to identify appropriate tools for the evaluation of individuals with the disorder. Another aspect to be considered is whether the attractiveness and game-like nature of computer-based testing enable the release of dopamine, improving attention levels and thus masking the attention deficit (Dovis, Van der Oord, Wiers & Prins, 2011; Lumsden et al., 2016).

Among the computer-based measures currently used in the evaluation of children with ADHD, there are CPTs (Continuous Performance Tests) and cognitive assessment batteries such as CANTAB (Cambridge Neuropsychological Test Automated Battery). Nonetheless, the comparison between digital and paper-and-pencil measures, as well as the possible impact of the digital format on the cognitive evaluation of people with ADHD, is still underexplored, with studies lacking especially in the Brazilian context. The disorder is also present in a significant part of the population and causes broad harm to those individuals, which establishes an urgent demand for such studies (Fried, Hirshfeld-Becker, Petty, Batchelder & Biederman, 2012; Wang et al., 2011).

This study's objective was to assess the impact of a test's digital format on the evaluation of cognitive functions of children with ADHD symptoms, along with the differential impact in comparison to typically developing children on tasks in different formats. In addition, the study aimed to verify whether there was a significant difference between the effect sizes obtained for these groups on computer-based tests and on similar paper-and-pencil tests. Among the hypotheses, we expected significant differences between the groups' scores. We also considered the possibility that computer-based testing could be used with the clinical group, and that the impact of the platform on this group would not be significantly different from its impact on the typically developing group.

Method

The present study is part of a larger research project developed in the Laboratory of Evaluation and Intervention in Health (LAVIS) of the Department of Psychology of UFMG (Universidade Federal de Minas Gerais), in partnership with the Center for Development of Technologies of Inclusion (CEDETI) of the Pontifical Catholic University of Chile (PUC-Chile), to do a cross-cultural adaptation and validation of TENI (Test of Infant Neuropsychological Evaluation) for Brazil. The study was approved by UFMG's Research Ethics Committee under CAAE (51216815.9.0000.5149).

Participants

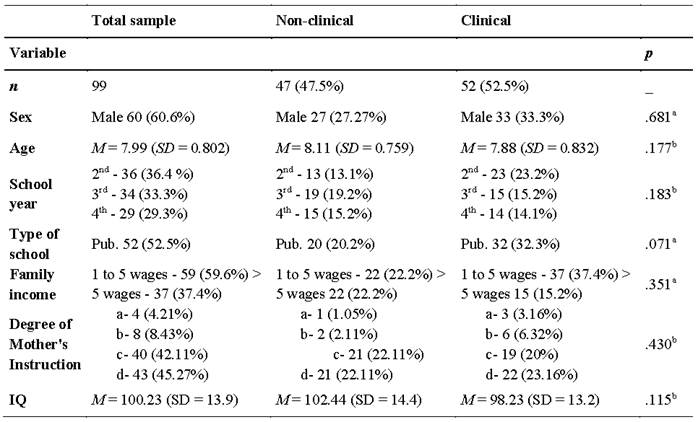

The sample of the present study was selected from 237 children in 2nd, 3rd and 4th grade from public and private schools. The sample size was calculated considering the total population of 92,358 children enrolled in the 2nd, 3rd and 4th grades of elementary school in the city of Belo Horizonte, the capital of the state of Minas Gerais (Brazil). The calculation used a confidence level of 90 % and a sampling error of 5 %, considering that approximately 5 % of the child population would present ADHD. Thus, the indicated sample was 52 children with symptomatology compatible with ADHD, which was precisely the size of the final clinical sample obtained in the study. Among those in the clinical group, 9 (17 %) were using psychopharmaceuticals to treat ADHD. These children had their participation previously authorized by their parents, who received and filled out a socioeconomic questionnaire and a scale of perceived inattention and hyperactivity symptoms (Swanson, Nolan & Pelham Scale Version IV - SNAP IV; Mattos, Serra-Pinheiro, Rohde & Pinto, 2006).
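The reported figures are consistent with a standard sample size calculation for estimating a proportion. The sketch below is illustrative only: it assumes Cochran's formula with a finite population correction, which the text does not name, and the function and parameter names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_proportion(population, p, margin, confidence):
    """Sample size for estimating a proportion (assumed: Cochran's formula
    with finite population correction; the source does not name its formula)."""
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an infinite population.
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    # Finite population correction.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# Values reported in the text: 92,358 children, 5 % expected prevalence,
# 5 % sampling error and 90 % confidence level.
print(sample_size_proportion(92_358, 0.05, 0.05, 0.90))  # prints 52
```

Under these assumptions, the calculation returns the 52 children indicated in the text.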

With the provided information, 55 children meeting the clinical cutoff scores on the SNAP-IV (as defined by Costa, de Paula, Malloy-Diniz, Romano-Silva & Miranda, 2018) of 1.72 for inattention and 1.17 for hyperactivity were selected. Three of them were excluded from the sample because of intellectual disability or an intelligence quotient below 70. The 47 members of the control group were then selected so that there was no significant difference in age, sex, school type or grade in relation to the clinical group (sample pairing). Table 1 presents descriptive and comparative data of the clinical and control groups, including their characterization.

Table 1: Characterization of the clinical and non-clinical sample, according to age, school type, sex, maternal schooling, family income, IQ and school year (n = 99)

Notes: M = Mean; SD = Standard Deviation; Pub = Participants from public schools. Mother's or caretaker's degree of education: A = illiterate to incomplete elementary school; B = complete elementary school to incomplete secondary education; C = complete high school to incomplete university; D = complete higher education. a = Pearson's Chi-Squared Test; b = Mann-Whitney U test; IQ = intelligence quotient. All p > .05, indicating that the differences between the groups were not significant.

Materials & Instruments

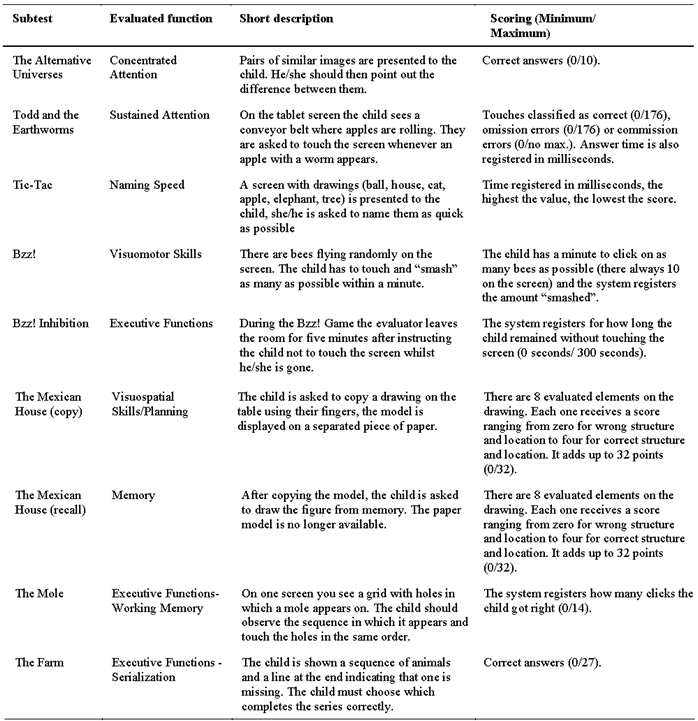

Neuropsychological Evaluation of Children - TENI

TENI is a computer-based cognitive assessment battery for children aged 3 to 9 years developed by CEDETI of PUC-Chile. It is administered on tablets and has nine subtests, which evaluate various cognitive constructs, allowing a broad examination in a short period of time. All subtests were created as games with an attractive and user-friendly interface. Its Chilean version has good psychometric properties and presents strong evidence of validity and reliability (Cronbach's alpha between .8 and .9). Further analysis was done by specialists, calculating the correlations as well as using the test-retest and split-half methods to evaluate the tool's reliability (Delgado et al., 2012). The Brazilian cross-cultural adaptation and validation was done with a sample of typically developing children from Minas Gerais. The factor analysis indicated acceptable and significant results. The intercorrelations between subtests provided evidence of convergent and discriminant validity, with significant and strong correlations among subtests that assessed similar constructs. Moreover, tasks that evaluated theoretically distinct constructs pointed to divergence. The subtests used in the present study are described in Table 2 (Martins, Barbosa-Pereira, Valgas-Costa & Mansur-Alves, 2020).

Wechsler Abbreviated Scale of Intelligence - WASI

WASI is a brief assessment of intelligence suitable for individual administration in clinical and research settings. The instrument is composed of four subtests (vocabulary, block design, similarities and matrix reasoning), although the IQ can be estimated with only two of them (vocabulary and matrix reasoning). The tool has a high reliability index, with Cronbach's alpha ranging from .82 to .92 on the subtests (Trentini, Yates & Heck, 2014).

Rey's Complex Figure

It evaluates the neuropsychological functions of visual perception and immediate memory. Additionally, it is widely used to investigate issues related to the planning and execution of actions, beyond visual memory. Its psychometric studies evidenced high internal consistency, with Cronbach's alpha estimated at .89 for copy and .83 for recall (Oliveira & Rigoni, 2010).

Corsi Block-Tapping Test

It evaluates visuospatial short-term memory and, when the order of the items is reversed, its executive component, since the task then requires the use of working memory. The test consists of a tray with randomly arranged blocks, which the examinee must tap according to the sequence previously shown by the examiner, first in direct order and then in reverse order. Although it has not been adapted for the Brazilian population, international studies have shown a linear increase in test performance with age (Corsi, 1972).

Nine Hole Peg Test

It is a measure of finger dexterity, fine manual speed and visual motor coordination that can also be useful for the motor evaluation of different clinical groups (e.g., patients with cerebral palsy, Parkinson's disease and multiple sclerosis). Studies using the tool demonstrated a progressive increase in manual dexterity with age (Kane & Parsons, 2017; Kellor, Frost, Silberberg, Iversen & Cummings, 1971; Willcutt, 2012).

Rapid Naming Task

Also known as Rapid Automatized Naming (RAN), it is a screening task that evaluates one of the precursors of reading. The person must sequentially, quickly and successively recall the name of distinct symbols previously introduced by the evaluator (Capellini, Smythe & Silva, 2017).

Five Digit Test (FDT)

FDT is a measure of core executive functions, such as inhibitory control and cognitive flexibility, as well as simple attentional processes, including reading and counting. The stimuli used are minimally influenced by formal schooling and social differences. The instrument has good internal consistency and a Guttman coefficient above .90 (Sedó, de Paula & Malloy-Diniz, 2015).

BPA - Psychological Battery for Attention Evaluation

It is a comprehensive evaluation used to assess attention and its components, i.e., sustained, divided and alternating attention. The stimuli used (both target and distractor) are abstract shapes, which minimizes the impact of schooling on performance. The General Attention level (AG) is calculated upon completion using the sum of the three subtests. Reliability was verified using test-retest, and the correlations between administrations ranged from r = .68 to .89, p < .05 (Rueda, 2013).

SNAP-IV - Swanson, Nolan, and Pelham, Version IV

SNAP-IV is a public domain questionnaire used to assess symptoms of Attention Deficit / Hyperactivity Disorder (ADHD); the instrument was based on the diagnostic criteria for ADHD established by the DSM-IV. The tool has evidence of validity and reliability, with exploratory and confirmatory factor analyses suggesting an adequate fit to the factor structure of the questionnaire. The coefficients of internal consistency were high, with Cronbach's alpha of .94 for inattention and .92 for hyperactivity. In addition, a ROC curve analysis was used to establish cutoff scores that balance sensitivity and specificity. Using the mean score, the cutoff was 1.72 for inattention (AUC = 0.877, sensitivity of 0.79 and specificity of 0.81) and 1.17 for hyperactivity (AUC = 0.788, sensitivity of 0.70 and specificity of 0.73) (Costa et al., 2018; Mattos et al., 2006).
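The use of the mean-score cutoffs can be illustrated with a minimal sketch. It assumes the 18-item version of the questionnaire, with items 1 to 9 covering inattention and items 10 to 18 covering hyperactivity/impulsivity, each rated from 0 to 3, and it assumes that a mean at or above the cutoff counts as clinical. The text reports only the cutoff values, so the function name, the item layout and the comparison rule are illustrative assumptions.

```python
# Cutoffs on the mean subscale score, as reported in the text (Costa et al., 2018).
INATTENTION_CUTOFF = 1.72
HYPERACTIVITY_CUTOFF = 1.17


def snap_iv_screen(item_scores):
    """Return the mean subscale scores and whether each reaches its cutoff.

    Assumed layout (not stated in the source): 18 ratings from 0 to 3,
    inattention items first, hyperactivity/impulsivity items last.
    """
    if len(item_scores) != 18:
        raise ValueError("expected 18 item ratings")
    if any(score not in (0, 1, 2, 3) for score in item_scores):
        raise ValueError("each rating must be 0, 1, 2 or 3")
    inattention = sum(item_scores[:9]) / 9
    hyperactivity = sum(item_scores[9:]) / 9
    return {
        "inattention_mean": inattention,
        "hyperactivity_mean": hyperactivity,
        # Assumption: a mean at or above the cutoff is treated as clinical.
        "inattention_clinical": inattention >= INATTENTION_CUTOFF,
        "hyperactivity_clinical": hyperactivity >= HYPERACTIVITY_CUTOFF,
    }


# Hypothetical ratings: "2" on every inattention item, "1" on the others.
result = snap_iv_screen([2] * 9 + [1] * 9)
print(result["inattention_clinical"], result["hyperactivity_clinical"])
```

The sketch reports the two subscales separately, since the way they were combined for group assignment is not detailed here.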

Socioeconomic Questionnaire

The questionnaire was created for the TENI validation studies, and it had to be filled out by the guardians of the participating children. The questions included topics on the family monthly income, parents' educational level, number of people dependent on said income, neighborhood safety issues, child ethnicity, psychiatric or neurological diagnoses, and medications, among other social and economic indicators.

Procedures

The study was conducted through a partnership with three schools and a reference center for inclusive education. The institutions signed a Consent Form and were informed of all the procedures. The students were then invited to participate through an Invitation Letter sent to their guardians. An Informed Consent Form (TCLE, in Portuguese), a socioeconomic questionnaire and a copy of SNAP-IV were sent along with the letter. Besides the consent of the guardians, the children's assent was requested during the first interview.

The evaluations were carried out by a team of research assistants and psychology professionals during the school year of 2018. The assessments were individual and occurred in two meetings with each child, lasting approximately 70 minutes each. Upon completion and correction of each form, the parents of each participant received a feedback letter describing the child's performance on the tasks. The partner institutions also received feedback through letters with the overall presentation of the participants' results.

Results

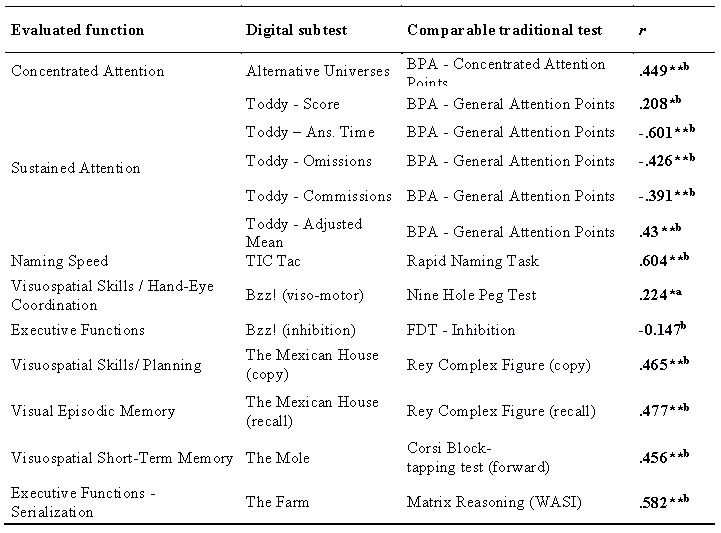

Correlations between cognitive subtests in paper-and-pencil and computer-based tools

We examined the correlation between the participants' performance on digital and traditional tasks that evaluated similar constructs. As shown in Table 3, the correlations were overall significant and of moderate magnitude. Nonetheless, there was a low correlation between the digital sustained attention task (Toddy) and the BPA's general attention score. The visuomotor evaluation also displayed a low correlation between the traditional and digital tasks that required manual dexterity (Nine Hole Peg Test and Bzz! Visuomotor). Finally, the only correlation that was not statistically significant occurred between the inhibition items of the digital Bzz! task (gratification delay) and those of the FDT (which requires inhibiting an automated response following a change in environmental demand).

Table 3: Correlations between digital and traditional tasks

Notes: a Pearson correlation coefficient. b Spearman Correlation Coefficient. * Significant correlation with p < .05 (two-tailed). ** Significant correlation with p < .01 (two-tailed).
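As a reminder of how the two coefficients named in the notes differ, the sketch below computes both using only the Python standard library. The function names and the scores are hypothetical and do not reproduce the study's data; Spearman's coefficient is obtained as Pearson's coefficient applied to average ranks.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical scores for the same children on a digital and a traditional task.
digital = [12, 15, 9, 20, 17, 11]
traditional = [30, 34, 25, 41, 33, 29]
print(round(pearson(digital, traditional), 3), round(spearman(digital, traditional), 3))
```

Spearman's coefficient depends only on the ordering of the scores, which makes it the usual choice when a variable is not normally distributed.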

Performance comparison between clinical and nonclinical groups in digital and traditional measures

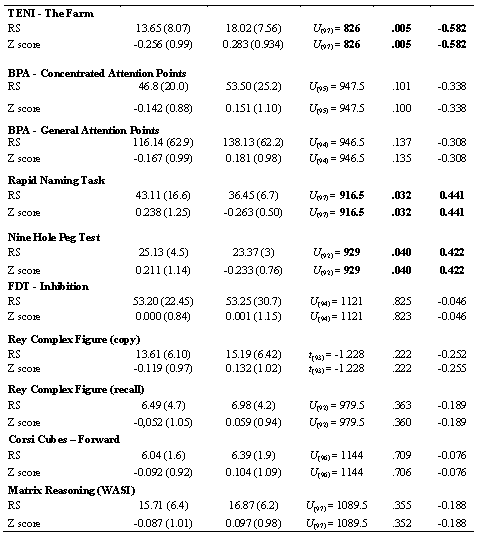

We compared the means of the clinical and nonclinical groups on the computer-based and traditional tasks, using both raw scores and standardized Z scores. Table 4 presents the means and standard deviations, the test statistics (independent-samples t-test, or Mann-Whitney U test for variables with non-normal distribution) and the effect size of each difference.

The children in the clinical group presented significantly worse performance on all tasks and measures within Toddy, as well as on the Mexican House (Copy), Bzz! Inhibition and The Farm. On the traditional tests, the control group showed significantly better results on the rapid naming task and on the Nine Hole Peg Test.

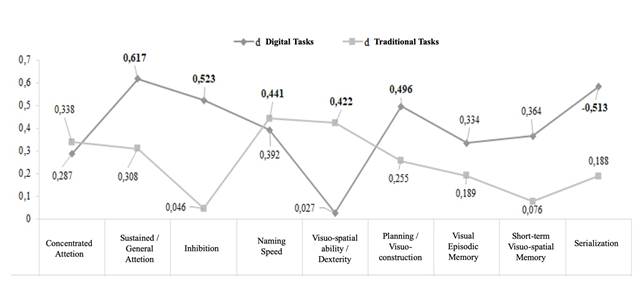

Graph 1 shows the effect sizes of the group comparisons, with the statistically significant ones in bold. The computer-based versions of the sustained attention, visuospatial ability/planning and seriation tests all showed significant differences in performance between the groups, and their effect sizes were also larger than those of the traditional measures. A larger effect size was also observed on the digital tasks that evaluated visual episodic memory and visuospatial short-term memory, in contrast with their paper-and-pencil counterparts, although the mean difference was not significant. The traditional measures of manual dexterity and rapid naming presented greater effects than their digital counterparts. The same occurred with the paper-and-pencil concentrated attention test; however, for this test, the differences between the groups were not significant.

Notes: RS = Raw Score; Z score = Score converted to a z score controlling for age; M = Mean; SD = Standard Deviation; Df = Degrees of freedom; p = Statistical significance; d = Effect size; U = Result of the non-parametric Mann-Whitney test; t = Result of the independent-samples t-test. A negative d value indicates a higher result for the nonclinical group, since the mean of the clinical group was entered first in the formula.
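The sign convention described in the notes can be made concrete with a short sketch of Cohen's d. The pooled-standard-deviation variant used here is an assumption, since the exact formula is not stated, and the function name and scores are hypothetical.

```python
import math
from statistics import mean, stdev


def cohens_d(first_group, second_group):
    """Cohen's d with a pooled standard deviation (assumed variant).

    The group passed first is the minuend, so d is negative whenever the
    second group has the higher mean.
    """
    n1, n2 = len(first_group), len(second_group)
    pooled_variance = (
        (n1 - 1) * stdev(first_group) ** 2 + (n2 - 1) * stdev(second_group) ** 2
    ) / (n1 + n2 - 2)
    return (mean(first_group) - mean(second_group)) / math.sqrt(pooled_variance)


# Hypothetical z scores; the clinical group is entered first, as in the notes.
clinical = [-0.8, -0.2, 0.1, -0.5, 0.3]
nonclinical = [0.2, 0.6, -0.1, 0.9, 0.4]
print(round(cohens_d(clinical, nonclinical), 2))  # negative: nonclinical mean is higher
```

Swapping the order of the arguments changes only the sign of d, not its magnitude.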

Within groups comparison of digital and traditional tests

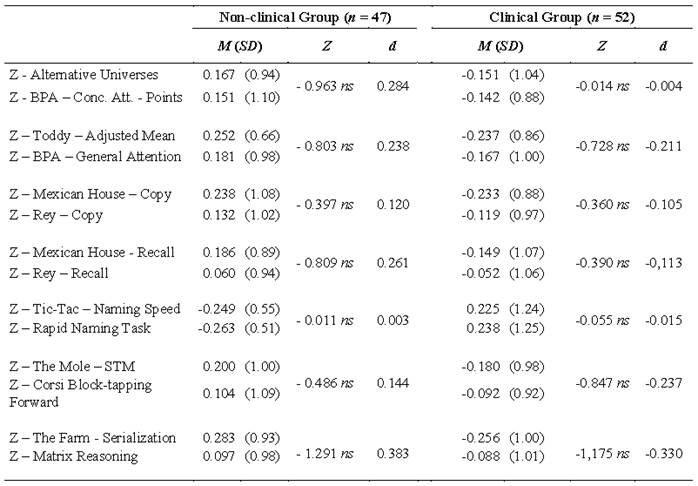

In order to identify whether the use of digital tests generates results significantly different from those found in traditional tests for children who present symptoms of inattention and hyperactivity, the means obtained in both forms of administration were compared using the Wilcoxon test (the nonparametric equivalent of the paired-samples t-test), which is indicated for comparisons of repeated measurements. The standardized scores (Z scores) of both the digital and the traditional tests were used, paired according to the evaluated constructs, and they resulted in positive correlations.
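For readers less familiar with this procedure, the following is a minimal sketch of the Wilcoxon signed-rank test using only the Python standard library. It relies on the normal approximation without continuity or tie corrections, which is a simplification rather than the authors' exact procedure; the function name and the scores are hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank(first, second):
    """Wilcoxon signed-rank test for paired samples (normal approximation).

    Returns (w_plus, w_minus, z, p_two_sided). Zero differences are dropped;
    no continuity or tie correction is applied (simplifying assumptions).
    """
    diffs = [a - b for a, b in zip(first, second) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_diffs = [abs(d) for d in diffs]
    order = sorted(range(n), key=lambda i: abs_diffs[i])
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Tied absolute differences share the mean of their ranks.
        while j + 1 < n and abs_diffs[order[j + 1]] == abs_diffs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    expected = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - expected) / sd
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, w_minus, z, p


# Hypothetical z scores of the same children: digital test first, traditional second.
digital = [0.1, -0.4, 0.3, -0.9, 0.5, -0.2]
traditional = [0.3, -0.1, 0.2, -0.5, 0.1, 0.4]
print(wilcoxon_signed_rank(digital, traditional))
```

With samples as small as this hypothetical one, an exact test would be preferable to the normal approximation.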

Table 5 shows that there were no significant differences in performance within the groups between their scores on computer-based and paper-and-pencil measures, and the effect sizes were small. Yet, children in the clinical group showed better performance on traditional tasks, while the nonclinical group showed better results on digital tasks (except on the rapid naming task, which showed the opposite pattern). Overall, the effects were small for both groups, and smaller in the clinical one.

Table 5: Intragroup comparison of means in traditional and digital tests

Notes: Results of the Wilcoxon signed-rank test, appropriate for dependent samples. Similar digital and traditional tests were paired after transformation into Z scores. Ns = Non-significant value with p > .05. A negative d value indicates a higher result on the traditional test, since the digital tests were entered first in the formula. STM = short-term memory. The digital tests were entered as time 1 and the traditional ones as time 2.

Discussion

The use of digital resources to aid in the evaluation of cognitive functions is already a reality, mainly abroad, where several studies use computerized tests as tools in their investigations. Still, it is important to verify the possible impact of digital measures on the evaluation, and to note that such impact may differ across samples: children with ADHD, for instance, may be affected differently from those with typical development (Lumsden et al., 2016).

Thus, the present study aimed to verify the impact of computer-based tests on the evaluation of cognitive functions in children with ADHD symptoms. To do so, performance on digital and traditional tasks was compared in a sample of 52 children with clinical ADHD symptoms and 47 children with fewer symptoms. As socioeconomic factors influence children's neurocognitive development (Ursache & Noble, 2015), the groups were selected so as not to have significant differences regarding the type of school (public or private), family income or maternal education level. The groups also did not present significant differences in age, school year, sex or IQ.

The study found that digital and traditional tests that evaluate the same functions generate similar results. There were significant correlations in measures that assess the same construct, such as short-term visuospatial memory, planning ability, naming speed, visual episodic memory and reasoning. The correlations ranged from low to high, indicating comparability between digital and traditional tests. Similar results are described in the systematic review by Lumsden et al. (2016), who reported that most of the cognitive tests using technology and gaming features presented correlations between computer-based and traditional tests with values ranging from r = .45 to r = .60.

In the current study, besides the significant correlations between tests that evaluated the same constructs, the correlations were higher for tasks with greater similarity in stimuli and form of administration. Both the traditional and digital versions of the rapid naming task, for example, required the participant to name the figures that appeared on the screen/paper as fast as possible; on account of that similarity, the correlation reached r = .717 (p < .01). In contrast, the tests involving manual dexterity, which had distinct stimuli and administration and were scored differently (one by number of hits and the other by completion time), presented a low correlation, r = -.242 (p < .05).

In searching for similar computer-based and paper-and-pencil tests, we attempted to use comparable tools. Nevertheless, there were not many traditional instruments validated for Brazilian children within the selected age group. For instance, the digital sustained attention task (Toddy) was compared to the BPA's overall score for lack of better options. Although the evaluated construct is not exactly the same, in both tests the participant must remain attentive for an extended period of time. While the digital task lasts seven uninterrupted minutes, the traditional battery consists of three tasks in a row, lasting around eight and a half minutes with brief interruptions between them. In general, the two measures presented significant and moderate correlations.

Another comparison between tests assessing distinct facets of related constructs was performed between the inhibition tasks (Bzz! and FDT Inhibition). According to Diamond (2013), inhibitory control is a function that involves the ability to do what is necessary by controlling attention, thoughts, emotions and behaviors in order to override a strong internal predisposition. One aspect of this ability is self-control, which involves restraining emotions and impulsiveness. The other, in turn, is the inhibitory control of attention, related to the regulation of interference in perception, which allows one to respond selectively to some stimuli and suppress others. This function operates in support of working memory and usually relates more to it than to other inhibitory aspects. As a result, it was not surprising to find a non-significant correlation between the Bzz! and FDT tasks, since one was closely associated with the attentional aspects of inhibition, whilst the other evaluated the affective facet.

The present study also examined the performance differences between the clinical and non-clinical groups on digital and traditional tests that evaluated cognitive functions; to analyze them, we compared the group means in both formats. Children with ADHD symptoms had worse mean performance on all measures, with significant differences mainly on the digital ones. Significant differences were observed in the digital tests of sustained attention, inhibition, seriation and visuomotor/planning ability, with effect sizes between d = 0.43 and d = 0.61, greater than those of their traditional pairs. On the paper-and-pencil tools, there were significant differences on the tasks of manual dexterity and rapid naming, with effect sizes of d = 0.42 for the former and d = 0.44 for the latter.

Searching for significant differences between typically developed and ADHD children's performance is common in the literature, and the results are often variable. In a meta-analysis, Willcutt et al. (2005) considered 83 articles that investigated executive functions in patients with ADHD and showed that in only 65 % of the studies significant differences were reported. In those studies, the clinical group presented significantly worse performance than the control, with effect sizes between d = 0.43 and d = 0.69 on different functions. Among the analyzed tasks, there were CPTs, Rey's Complex Figure (Copy) and working memory tests. The authors report that studies using standardized cut-off points for group definition, rather than clinical diagnosis, also found significant differences in cognitive performance, but with smaller effect sizes (d = 0.41 ± 0.16).

The aforementioned meta-analysis was used by Fried et al. (2012) to compare the effect sizes obtained for the difference in performance between ADHD and control groups on CANTAB and on paper-and-pencil tests. These authors mention that the use of CANTAB generated results similar to those reported by Willcutt, but with slightly lower effects, ranging between d = 0.39 and d = 0.63. The present study had results compatible with those presented in both articles. For instance, the Mexican House (Copy) had an effect size of d = 0.49, while the CANTAB planning task had d = 0.41 and Rey's Complex Figure (Copy) had d = 0.43. TENI's sustained attention task had an effect size similar to those of other studies that involved CPTs. The meta-analytic data presented effect sizes of d = 0.51 and d = 0.64 for omission and commission errors, respectively, while TENI's were d = 0.44 and d = 0.51. Toddy's response time measure is based on continuous performance models for sustained attention, and its effect size (d = 0.54) was similar to those of CANTAB (d = 0.39), ClinicaVR: Classroom-CPT-VC (d = 0.59) and the meta-analytic data (d = 0.61) (Fried et al., 2012).

In this study, there were significant differences between groups when using the traditional rapid naming task (d = 0.44). Similarly, the study by Bidwell, Willcutt, DeFries & Pennington (2007), which had 266 children with ADHD and 332 with typical development, reported that the clinical group presented significantly worse performance on the RAN, although the effect size was d = 0.71. On the traditional dexterity test, the clinical group had worse performance (d = 0.42), which is consistent with national studies, including the one conducted by Oliveira, Cavalcante and Palmares (2018). Their research reported that 43.38 % of children with ADHD had major dexterity impairments, as well as aiming and balance difficulties, which were absent in the control group.

Therefore, even though significant differences were observed in the groups' performance on digital tasks regarding executive functions (planning, reasoning and inhibition), sustained attention, naming speed and visuomotor ability, these differences were not statistically evidenced across all the paper-and-pencil tests that composed the evaluation battery. However, Willcutt et al. (2005) show that many studies do not report statistically significant differences between ADHD and comparison groups. Moreover, Raiford, Drozdick & Zhang (2015) state that although the effect size may vary between studies that rely on different samples of participants with ADHD, the expected outcome pattern remains the same. This pattern, in which groups with symptoms of inattention and hyperactivity show worse performance in certain cognitive functions, was observed in both digital and traditional tests, although the differences were not always significant.

According to Raiford et al. (2015), the impact of the computerized administration of cognitive tests on individuals with specific clinical conditions has not been broadly investigated. Therefore, this study also aimed to verify whether the digital format of cognitive tests generated a differential impact when comparing children of the clinical and nonclinical groups. Lumsden et al. (2016) state that many digital tests use the gaming format to evaluate and/or train cognition. Due to their attractiveness, some are targeted at specific clinical groups, such as individuals with ADHD. Still, the authors also consider that, by providing a structured, feedback-rich environment, such tasks may minimize the symptoms of inattention and hyperactivity, so that they are scarcely detected. In this case, inattentive and hyperactive people would be expected to perform better on gaming-format tasks. This establishes the need to further investigate whether tests turned into games could invalidate the evaluation: if the tools mask the deficit that they intend to measure, they could have a differential influence on groups such as those with ADHD in comparison to typically developing children.

To further understand what kind of influence the change of testing environment could have on the groups' performance, the standardized scores of both types of evaluation were compared using paired-sample analysis. There were no statistically significant differences between performance on digital and traditional tests, either in the group composed of children with symptoms of inattention and hyperactivity or in the comparison group. It should be noted that all effect sizes were small (d between -0.004 and 0.383).

Although the differences found were not significant, there was a differential pattern between groups. In general, the clinical group performed better on the traditional tasks, while the nonclinical group had better averages on the digital counterparts. This pattern is consistent with the fact that only computer-based tasks generated significant differences between groups, the exception being the RAN. Hence, contrary to what was suggested by Lumsden et al. (2016), despite the use of a digital game-like task, the performance of children with ADHD symptoms did not benefit from the platform.

The differential pattern, however, may be due to the lack of isomorphism between the tasks, which may even be evaluating disparate constructs. For example, the inhibition tasks assess different constructs: the FDT evaluates attentional inhibitory control, while Bzz! measures self-control. Another case is Todd, which assessed sustained attention, an ability known to be compromised in individuals with ADHD, while the BPA evaluated alternating and divided attention, which are not as affected in this group (Frazier, Demaree & Youngstrom, 2004; Nigg, 2005).

In summary, the present study provides evidence that the digital format did not cause losses in the evaluation of children with ADHD symptoms. Both the computer-based and the traditional tests generated similar results, especially on similar tasks. As expected, children with symptoms of inattention and hyperactivity at the clinical level demonstrated significantly worse performance on digital tasks that assess constructs typically reported as deficient in ADHD. Intragroup comparisons showed that children with typical development tended to perform better on digital tests, while those with ADHD symptoms showed the opposite pattern. However, it should be noted that such differences did not reach statistical significance. Also, the minor differences may be due not to the test format but to the distinction between the evaluated constructs. Therefore, it is possible that the test format does not have a significantly different impact on the results of the studied groups.

Final considerations

This investigation contributes to the field by indicating that digital tools may be useful for the evaluation of children with symptoms of ADHD. Additionally, the study presents evidence that the differential impact was not significant between these children and the group without a clinical presentation of symptoms. Nevertheless, it is worth noting that this research had limitations, including: the use of a non-probabilistic sample, selected for convenience; the absence of a sample with a clinical ADHD diagnosis; the use of only one source of information about the children's symptoms (only the parents' opinion was requested); the fact that comorbidities were neither evaluated nor controlled; and the difficulty in selecting traditional tests validated for the age group that evaluated the same constructs.

Further studies are required to address these limitations, with larger and more diversified samples, along with confirmation of the clinical diagnosis by specialized professionals, specifying the subtype of the disorder. Another suggestion would be to compare tasks that evaluate other functions typically reported as deficient in ADHD, for example, working memory. Furthermore, comparisons could be made between TENI and different digital tests, such as the Visual Attention Test (TAVIS), to verify whether there is a differential impact on clinical groups and controls in distinct measures (Duchesne & Mattos, 1997).
