CLEI Electronic Journal

Online version ISSN 0717-5000

CLEIej vol.18 no.3 Montevideo Dec. 2015

Formal Analysis of Security Models for Mobile Devices, Virtualization Platforms, and Domain Name Systems

Abstract

In this work we investigate the security of security-critical applications, i.e. applications in which a failure may produce consequences that are unacceptable. We consider three areas: mobile devices, virtualization platforms, and domain name systems.

The Java Micro Edition platform defines the Mobile Information Device Profile (MIDP) to facilitate the development of applications for mobile devices, like cell phones and PDAs. We first formally study and compare several variants of the security model specified by MIDP to access sensitive resources of a mobile device.

Hypervisors allow multiple guest operating systems to run on shared hardware, and offer a compelling means of improving the security and the flexibility of software systems. In this work we present a formalization of an idealized model of a hypervisor. We establish (formally) that the hypervisor ensures strong isolation properties between the different operating systems, and guarantees that requests from guest operating systems are eventually attended to. We also show that virtualized platforms are transparent, i.e. a guest operating system cannot distinguish whether it executes alone or together with other guest operating systems on the platform.

The Domain Name System Security Extensions (DNSSEC) is a suite of specifications that provides origin authentication and integrity assurance services for DNS data. We finally introduce a minimalistic specification of a DNSSEC model which provides the grounds needed to formally state and verify security properties concerning the chain of trust of the DNSSEC tree.

We develop all our formalizations in the Calculus of Inductive Constructions, a formal language that combines a higher-order logic and a richly typed functional programming language, using the Coq proof assistant.

Keywords: Formal modelling, Security properties, Coq proof assistant, JME-MIDP, Virtualization, DNSSEC.

Received: 2015-08-09 Revised: 2015-10-26 Accepted: 2015-10-29

1 Introduction

There are multiple definitions of the term safety-critical system. In particular, a system is safety-critical if its failure could lead to consequences that are determined to be unacceptable. In essence, a system is safety-critical when we depend on it for our welfare. In this paper we provide a detailed account of the work presented in [1], where we investigated the security of three areas of safety-critical applications:

1. mobile devices: with the increasing capabilities of mobile devices (like cell phones and PDAs) and their subsequent consumer adoption, these devices have become an integral part of how people perform tasks at work and in their personal lives. Unfortunately, the benefits of using mobile devices are sometimes counteracted by security risks;

2. virtualization platforms: hypervisors allow multiple operating systems to run in parallel on the same hardware, by ensuring isolation between their guest operating systems. In effect, hypervisors are increasingly used as a means to improve system flexibility and security; unfortunately, they are often vulnerable to attacks;

3. domain name systems: domain name systems constitute distributed databases that provide support to a wide variety of network applications such as electronic mail, WWW, and remote login. The important and critical role of these systems in software systems and networks makes them a prime target for (formal) verification.

1.1 Security policies

In general, security (mainly) involves the combination of confidentiality, integrity and availability [2]. Confidentiality can be understood as the prevention of unauthorized disclosure of information; integrity, as the prevention of unauthorized modification of information; and availability, as the prevention of unauthorized withholding of information or resources [3]. However, this list is incomplete.

We distinguish between security models and security policies. The term security model can be interpreted as the representation of the confidentiality, integrity and availability requirements of a security system [4]. The more general usage of the term, which has been adopted in computer security, refers to a particular mechanism, such as access control, for enforcing (in particular) confidentiality. Since security issues arise in many different contexts, many security models have been developed.

A security policy is a specification of the protection goals of a system. This specification determines the security properties that the system should possess. A security policy defines executions of a system that, for some reason, are considered unacceptable [5]. For instance, a security policy may affect:

- access control, and restrict the operations that can be performed on elements;

- availability, and restrict processes from denying others the use of a resource;

- information flow, and restrict what can be inferred about objects from observing system behavior.
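To make the idea of a policy as a set of unacceptable executions concrete, the following Python sketch models an execution as a finite list of events and a policy as a predicate over such lists. The event encoding `(operation, subject, object)` and the helpers `access_control` and `no_resource_hoarding` are hypothetical, chosen only for illustration. Information-flow policies do not fit this shape, since they constrain pairs of executions rather than individual ones.

```python
from typing import Callable, List, Set, Tuple

Event = Tuple[str, str, str]      # (operation, subject, object): hypothetical encoding
Trace = List[Event]               # one finite execution of the system
Policy = Callable[[Trace], bool]  # True means the execution is acceptable

def access_control(allowed: Set[Event]) -> Policy:
    """Reject any execution that performs an operation outside `allowed`."""
    def acceptable(trace: Trace) -> bool:
        return all(event in allowed for event in trace)
    return acceptable

def no_resource_hoarding() -> Policy:
    """Toy availability rule: reject executions that end while a subject
    still holds a resource it acquired, denying it to others."""
    def acceptable(trace: Trace) -> bool:
        held = set()
        for op, subject, obj in trace:
            if op == "acquire":
                held.add((subject, obj))
            elif op == "release":
                held.discard((subject, obj))
        return not held
    return acceptable

policy = access_control({("read", "app", "contacts")})
print(policy([("read", "app", "contacts")]))                                # True
print(policy([("read", "app", "contacts"), ("write", "app", "contacts")]))  # False
print(no_resource_hoarding()([("acquire", "app", "camera")]))               # False
```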

To formulate a policy, it is necessary to describe the entities governed by the policy and to set the rules that constitute it. This could be done informally in a natural language document. However, approaches based on natural language fall short of providing the required security guarantees: because of the ambiguity and imprecision of informal specifications, it is difficult to verify the correctness of the system. To avoid these problems, formal security models are used.

1.2 Security models

Security models play an important role in the design and evaluation of high assurance security systems. Their importance was already pointed out in the Anderson report [6]. The first model to appear was Bell-LaPadula [7], in 1973, in response to US Air Force concerns over the confidentiality of data in time-sharing mainframe systems.

In the last decades, several countries developed their own security evaluation standards [8]. This initiative opened the path to worldwide mutual recognition of security evaluation results, and in this direction the Common Criteria (CC) [9] were developed. CC defines seven levels of assurance for security systems (EAL 1–7). To obtain an assurance level higher than EAL 4, developers are required to specify a security model for the system and to verify its security using formal methods. For example, the companies Gemalto and Trusted Logic obtained the highest level of certification (EAL 7) for their formalization of the security properties of the JavaCard platform [10, 11, 12], using the Coq proof assistant [13, 14].

State machines are a powerful tool used for modeling many aspects of computing systems. In particular, they can be employed to specify security models. The basic features of a state machine model are the concepts of state and state change. A state is a representation of the system under study at a given time, which should capture those system aspects relevant to our problem. Possible state transitions can be specified by a state transition function that defines the next state based on the current state and input. An output can also be produced.

If we want to analyze a specific safety property of a system, such as a security property, using a state machine model, we must first identify all the states that satisfy the property, and then check whether all transitions preserve it. If this is the case and the system starts in an initial state that has the property, then we can prove by induction that the property will always hold. Thus, state machines can be used to enforce a collection of security policies on a system.
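A minimal Python sketch of this inductive argument, assuming a toy access-control state machine: the subjects `root` and `guest`, the resources `file` and `admin`, and the functions `step` and `secure` are all hypothetical. Because this state space is finite, the inductive step is checked exhaustively here, whereas in a proof assistant such as Coq it is established by proof, which also covers infinite state spaces.

```python
from itertools import chain, combinations, product
from typing import FrozenSet, List, Tuple

Perm = Tuple[str, str]          # (subject, resource)
State = FrozenSet[Perm]         # set of permissions currently granted
Input = Tuple[str, str, str]    # (action, subject, resource)

SUBJECTS = ("root", "guest")
RESOURCES = ("file", "admin")
PERMS = list(product(SUBJECTS, RESOURCES))
INPUTS = [(a, s, r) for a in ("grant", "revoke") for s, r in PERMS]

def step(state: State, inp: Input) -> Tuple[State, str]:
    """Transition function: next state and an output from the current state and input."""
    action, subject, resource = inp
    if action == "grant":
        if resource == "admin" and subject != "root":
            return state, "denied"           # this guard is what preserves the property
        return state | {(subject, resource)}, "ok"
    if action == "revoke":
        return state - {(subject, resource)}, "ok"
    return state, "unknown"

def secure(state: State) -> bool:
    """Security property: only root may hold the admin permission."""
    return all(resource != "admin" or subject == "root" for subject, resource in state)

def all_states() -> List[State]:
    """Every subset of PERMS, i.e. the whole (finite) state space."""
    subsets = chain.from_iterable(combinations(PERMS, k) for k in range(len(PERMS) + 1))
    return [frozenset(s) for s in subsets]

def preserved() -> bool:
    """Inductive step: every transition from a secure state leads to a secure state."""
    return all(secure(step(state, inp)[0])
               for state in all_states() if secure(state)
               for inp in INPUTS)

initial: State = frozenset()
print(secure(initial) and preserved())  # True: by induction, every reachable state is secure
```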

1.3 Reasoning about implementations and models

The increasingly important role of critical components in software systems (such as hypervisors in virtual platforms or access control mechanisms in mobile devices) makes them a prime target for formal verification. Indeed, several projects have set out to formally verify the correctness of critical system implementations, e.g. [15, 16, 17].

Reasoning about implementations provides the ultimate guarantee that deployed critical systems provide the expected properties. There are however significant hurdles with this approach, especially if one focuses on proving security properties rather than functional correctness.

First, the complexity of formally proving non-trivial properties of implementations might be overwhelming in terms of the effort it requires. Worse, the technology for verifying some classes of security properties may be underdeveloped. Specifically, liveness properties are generally hard to prove, and there is currently no established tool-supported method for verifying, on implementations, security properties that involve two system executions (a.k.a. 2-properties), which makes their formal verification on large and complex programs exceedingly challenging. For instance, operating system isolation is a 2-safety property [18, 19] that requires reasoning about two program executions.
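To illustrate why isolation is a 2-property, the following Python sketch checks a single-step unwinding condition over pairs of states in a hypothetical two-OS system with one memory cell per guest. The `leaky_step` variant never writes outside the acting OS's own cell, so no single execution looks wrong; only comparing two executions that differ in os2's memory exposes the flow. Exhaustive enumeration works here only because the state space is tiny.

```python
from itertools import product
from typing import Callable, Tuple

State = Tuple[int, int]    # (memory of os1, memory of os2): one cell per guest OS
Action = Tuple[str, int]   # (acting OS, value it writes to its own cell)

def isolated_step(state: State, action: Action) -> State:
    """Each OS writes only its own cell, and the value depends only on the action."""
    who, value = action
    m1, m2 = state
    return (value, m2) if who == "os1" else (m1, value)

def leaky_step(state: State, action: Action) -> State:
    """Buggy variant: the value os1 ends up with depends on os2's memory."""
    who, value = action
    m1, m2 = state
    return (value + m2, m2) if who == "os1" else (m1, value)

def os1_view(state: State) -> int:
    """What os1 can observe: its own memory cell only."""
    return state[0]

def two_safe(step: Callable[[State, Action], State], values=range(3)) -> bool:
    """Check PAIRS of executions: whenever two states look the same to os1,
    taking the same action must leave them looking the same to os1."""
    states = list(product(values, values))
    actions = list(product(("os1", "os2"), values))
    return all(os1_view(step(s, a)) == os1_view(step(t, a))
               for s in states for t in states if os1_view(s) == os1_view(t)
               for a in actions)

print(two_safe(isolated_step), two_safe(leaky_step))  # True False
```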

Second, many implementation details are orthogonal to the security properties to be established, and may complicate reasoning without improving the understanding of the essential features for guaranteeing important properties. Thus, there is a need for complementary approaches where verification is performed on idealized models that abstract away from the specifics of any particular implementation, and yet provide a realistic setting in which to explore the security issues that pertain to the realm of those critical systems.

1.4 Formal analysis of security models for critical systems

In this section we introduce the research lines and the contributions of the thesis [1], summarized in this paper, for each of the three domains of safety-critical applications listed above.

1.4.1 Mobile devices

Today, even entry-level mobile devices (e.g. cell phones) have access to sensitive personal data, are subscribed to paid services and can establish connections with external entities. Users of such devices may, in addition, download and install applications from untrusted sites at will. This flexibility comes at a cost, since any security breach may expose sensitive data, prevent the use of the device, or allow applications to perform actions that incur a charge for the user. It is therefore essential to provide an application security model that can be relied upon: the slightest vulnerability may imply huge losses, given the scale at which the technology has been deployed.

Java Micro Edition (JME) [20] is a version of the Java platform targeted at resource-constrained devices, which comprises two kinds of components: configurations and profiles. A configuration is composed of a virtual machine and a set of APIs that provide the basic functionality for a particular category of devices. Profiles further determine the target technology by defining a set of higher-level APIs built on top of an underlying configuration. This two-level architecture enhances portability and enables developers to deliver applications that run on a wide range of devices with similar capabilities. This work concerns the topmost level of the architecture, which corresponds to the profile that defines the security model we formalize.

The Connected Limited Device Configuration (CLDC) [21] is a JME configuration designed for devices with slow processors, limited memory and intermittent connectivity. CLDC together with the Mobile Information Device Profile (MIDP) provides a complete JME runtime environment tailored for devices like cell phones and personal digital assistants. MIDP defines an application life cycle, a security model and APIs that offer the functionality required by mobile applications, including networking, user interface, push activation and persistent local storage. Many mobile device manufacturers have adopted MIDP since the specification was made available. Nowadays, literally billions of MIDP-enabled devices are deployed worldwide, and the market acceptance of the specification is expected to continue to grow steadily.

The security model of MIDP has evolved since it was first introduced. In MIDP 1.0 [22] and MIDP 2.0 [23] the main goal is the protection of sensitive functions provided by the device. In MIDP 3.0 [24] the protection is extended to the resources of an application, which can be shared with other applications. Some of these features are incorporated in the Android security model [25, 26, 27] for mobile devices, as we will see in Section 2.5.

Contributions

The contributions of the work in this domain are manifold:

- We describe a formal specification of the MIDP 2.0 security model that provides an abstraction of the state of a device and security-related events that allows us to reason about the security properties of the platform where the model is deployed;

- The security of the desktop edition of the Java platform (JSE) relies on two main modules: a Security Manager and an Access Controller. The Security Manager is responsible for enforcing a security policy declared to protect sensitive resources; it intercepts sensitive API calls and delivers permission and access requests to the Access Controller. The Access Controller determines whether the caller has the necessary rights to access resources. While for the JSE platform there exists a high-level specification of the Access Controller module (the basic mechanism is based on stack inspection [28]), no equivalent formal specification exists for JME. We illustrate the usefulness of our specification of the MIDP 2.0 security model by specifying an access control module for the JME platform and proving its correctness;

- The latest version of MIDP, MIDP 3.0, introduces a new dimension in the security model at the application level based on the concept of authorizations, allowing delegation of rights between applications. We extend the formal specification developed for MIDP 2.0 to incorporate the changes introduced in MIDP 3.0, and show that this extension is conservative, in the sense that it preserves the security properties we proved for the previous model;

- Besson, Duffay and Jensen [29] put forward an alternative access control model for mobile devices that generalizes the MIDP model by introducing permissions with multiplicities, extending the way permissions are granted by users and consumed by applications. One of the main outcomes of the work we report here is a general framework that sets up the basis for defining, analyzing and comparing access control models for mobile devices. In particular, this framework subsumes the security model of MIDP and several variations, including the model defined in [29].
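For readers unfamiliar with stack inspection [28], the following Python sketch shows a simplified form of the JSE check, in the spirit of `java.security.AccessController.checkPermission` and `doPrivileged`. It illustrates the JSE mechanism mentioned above, not the JME access controller developed in this work; the frame layout and permission names are hypothetical, and inherited thread contexts are ignored.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

@dataclass(frozen=True)
class Frame:
    method: str
    permissions: FrozenSet[str]   # permissions of the frame's protection domain
    privileged: bool = False      # frame is executing a privileged block

def check_permission(stack: List[Frame], perm: str) -> bool:
    """Walk from the current (last) frame towards the oldest one. Every frame
    visited must hold `perm`; a privileged frame that holds it ends the walk."""
    for frame in reversed(stack):
        if perm not in frame.permissions:
            return False
        if frame.privileged:
            return True
    return True

SYSTEM = frozenset({"file.read", "net.connect"})   # hypothetical permission names
APPLET = frozenset()                               # untrusted code: no permissions

direct = [Frame("main", SYSTEM), Frame("Applet.run", APPLET), Frame("FileReader.open", SYSTEM)]
via_library = [Frame("Applet.run", APPLET),
               Frame("Font.load", SYSTEM, privileged=True),
               Frame("FileReader.open", SYSTEM)]
print(check_permission(direct, "file.read"))       # False: an untrusted frame is on the stack
print(check_permission(via_library, "file.read"))  # True: the privileged frame stops the walk
```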

1.4.2 Virtualization platforms

Hypervisors allow several operating systems to coexist on commodity hardware, and provide support for multiple applications to run seamlessly on the guest operating systems they manage. Moreover, hypervisors provide a means to guarantee that applications with different security policies can execute securely in parallel, by ensuring isolation between their guest operating systems. In effect, hypervisors are increasingly used as a means to improve system flexibility and security, and authors such as [30] already in 2007 predicted that their use would become ubiquitous in enterprise data centers and cloud computing.

The increasingly important role of hypervisors in software systems makes them a prime target for formal verification. Indeed, several projects have set out to formally verify the correctness of hypervisor implementations. One of the most prominent initiatives is the Microsoft Hyper-V verification project [15, 16, 31], which has made a number of impressive achievements towards the functional verification of the legacy implementation of the Hyper-V hypervisor, a large software component that combines C and assembly code (about 100 kLOC of C and 5 kLOC of assembly). The overarching objective of the formal verification is to establish that a guest operating system cannot observe any difference between executing through the hypervisor or directly on the hardware. The other prominent initiative is the L4.verified project [17], which completed the formal verification of the seL4 microkernel, a general-purpose operating system kernel of the L4 family. The main thrust of the formal verification is to show that an implementation of the microkernel correctly refines an abstract specification. Subsequent machine-checked developments prove that seL4 enforces integrity, authority confinement [32] and intransitive non-interference [33]. The formalization does not model the cache.

Reasoning about implementations provides the final guarantee that deployed hypervisors fulfill the expected properties. There are however, as mentioned previously, serious difficulties with this approach. For example, many implementation details are orthogonal to the security properties to be established, and may complicate reasoning without improving the understanding of the essential features for guaranteeing isolation among guest operating systems. Therefore, there is a need for complementary approaches where verification is performed on idealized models that abstract away from the specifics of any particular hypervisor, and yet provide a realistic environment in which to investigate the security problems that pertain to the realm of hypervisors.

Contributions

The work presented here initiates such an approach by developing a minimalistic model of a hypervisor, and by formally proving that the hypervisor correctly enforces isolation between guest operating systems and, under mild hypotheses, guarantees basic availability properties to guest operating systems. In order to achieve a reasonable level of tractability, our model is significantly simpler than the setting considered in the Microsoft Hyper-V verification project: it abstracts away many specifics of memory management, such as shadow page tables (SPTs), and of the underlying hardware and runtime environment, such as I/O devices. Instead, our model focuses on the aspects that are most relevant for isolation properties, namely read and write operations on machine addresses, and is sufficiently complete to allow us to reason about isolation properties. Specifically, we show that an operating system can only read and modify memory it owns, and we prove a non-influence property [34] stating that the behavior of an operating system is not influenced by other operating systems. In addition, our model allows reasoning about availability: we prove, under reasonable conditions, that all requests of a guest operating system to the hypervisor are eventually attended to, so that no guest operating system waits indefinitely for a pending request. Overall, our verification effort shows that the model is adequate to reason about safety properties (read and write isolation), 2-safety properties (OS isolation), and liveness properties (availability).
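The core of read and write isolation can be sketched in a few lines of Python, assuming a hypothetical flat model in which each machine address has a single owning guest OS and the hypervisor mediates every access. This deliberately ignores page tables, hypercalls and the rest of the actual model; it only shows the ownership check and an exhaustive test that a write never modifies memory owned by another OS.

```python
from typing import Dict, Optional

Owner = Dict[int, str]    # machine address -> guest OS that owns it (hypothetical layout)
Memory = Dict[int, int]   # machine address -> stored value

def hv_read(owner: Owner, memory: Memory, guest: str, addr: int) -> Optional[int]:
    """Return the value at `addr` only if `guest` owns it; otherwise refuse (None)."""
    return memory[addr] if owner.get(addr) == guest else None

def hv_write(owner: Owner, memory: Memory, guest: str, addr: int, value: int) -> Memory:
    """Return the memory after the request; writes to unowned addresses are refused."""
    new_memory = dict(memory)
    if owner.get(addr) == guest:
        new_memory[addr] = value
    return new_memory

def write_isolation(owner: Owner, memory: Memory, sample_value: int = 99) -> bool:
    """For every guest and every address it targets, a write (of a sample value)
    leaves the memory owned by the other guests unchanged."""
    for guest in set(owner.values()):
        for addr in owner:
            after = hv_write(owner, memory, guest, addr, sample_value)
            if any(after[a] != memory[a] for a, o in owner.items() if o != guest):
                return False
    return True

owner = {0: "os1", 1: "os1", 2: "os2"}
memory = {0: 10, 1: 11, 2: 20}
print(hv_read(owner, memory, "os1", 2))  # None: os1 does not own address 2
print(write_isolation(owner, memory))    # True
```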

Additionally, in this work we present an implementation of a hypervisor in the programming language of Coq, and a proof that it realizes the axiomatic semantics, on an extended memory model with a formalization of the cache and Translation Lookaside Buffer (TLB). Although it remains idealized and far from a realistic hypervisor, the implementation arguably provides a useful mechanism for validating the axiomatic semantics. The implementation is total, in the sense that it computes for every state and action a new state or an error. Thus, soundness is proved with respect to an extended axiomatic semantics in which transitions may lead to errors. An important contribution in this part of the work is a proof that OS isolation remains valid for executions that may trigger errors.
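The idea of a total implementation can be sketched as a step function that never fails: for every state and action it returns either a new state or the unchanged state paired with an error. The action format and error codes below are hypothetical, and the property that erroneous steps leave the state unchanged is an assumption of this sketch, not necessarily how the Coq development handles errors.

```python
from itertools import product
from typing import Dict, Iterable, Optional, Tuple

Owner = Dict[int, str]               # machine address -> owning guest OS
Memory = Dict[int, int]              # machine address -> stored value
Action = Tuple[str, str, int, int]   # (kind, guest OS, address, value): hypothetical format

def step(owner: Owner, memory: Memory, action: Action) -> Tuple[Memory, Optional[str]]:
    """Total transition function: it never raises. It returns either the new
    memory with no error, or the unchanged memory with an error code."""
    kind, guest, addr, value = action
    if kind != "write":
        return memory, "unknown_action"
    if addr not in owner:
        return memory, "invalid_address"
    if owner[addr] != guest:
        return memory, "not_owner"
    new_memory = dict(memory)
    new_memory[addr] = value
    return new_memory, None

def errors_preserve_state(owner: Owner, memory: Memory, actions: Iterable[Action]) -> bool:
    """Assumed property of this sketch: an erroneous step leaves the state unchanged."""
    for action in actions:
        after, error = step(owner, memory, action)
        if error is not None and after != memory:
            return False
    return True

owner = {0: "os1", 2: "os2"}
memory = {0: 10, 2: 20}
actions = list(product(("write", "flush"), ("os1", "os2"), (0, 1, 2), (7,)))
print(step(owner, memory, ("write", "os1", 2, 5)))    # ({0: 10, 2: 20}, 'not_owner')
print(errors_preserve_state(owner, memory, actions))  # True
```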

Finally, we show that virtualized platforms are transparent, i.e. a guest operating system cannot distinguish whether it executes alone or together with other guest operating systems on the platform.

1.4.3 Domain name systems

The Domain Name System (DNS) [35, 36] constitutes a distributed database that provides support to a wide variety of network applications such as electronic mail, WWW, and remote login. The database is indexed by domain names. A domain name represents a path in a hierarchical tree structure, which in turn constitutes a domain name space. Each node of this tree is assigned a label; thus, a domain name is built as a sequence of labels separated by dots, from a particular node up to the root of the tree.
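As a small illustration of how a domain name is read off the tree, the following Python sketch walks from a node up to the root and joins the labels with dots. The `Node` class is hypothetical and the sample labels are for illustration only; the trailing dot denotes the root, whose label is empty.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Node:
    label: str
    parent: Optional["Node"] = None   # None only for the root, whose label is empty

def domain_name(node: Node) -> str:
    """Collect labels from `node` up to the root and join them with dots."""
    labels: List[str] = []
    while node.parent is not None:    # the root contributes no label
        labels.append(node.label)
        node = node.parent
    return ".".join(labels) + "."     # the trailing dot stands for the root

root = Node("")
www = Node("www", Node("fing", Node("edu", Node("uy", root))))
print(domain_name(www))   # www.fing.edu.uy.
print(domain_name(root))  # .
```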

A distinguishing feature of the design of DNS is that the administration of the system can be distributed among several (authoritative) name servers. A zone is a contiguous part of the domain name space that is managed by a set of authoritative name servers. Distribution is then achieved by delegating part of a zone's administration to a set of delegated sub-zones. DNS is a widely used, scalable system, but it was not conceived with security concerns in mind, as it was designed to be a public database with no intention of restricting access to information. Nowadays, a large number of distributed applications make use of domain names. Confidence in the correct operation of those applications depends critically on the use of trusted data: fake information inside the system has been shown to lead to unexpected and potentially dangerous problems.
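Delegation means that the servers responsible for a name are those managing the closest enclosing zone. The following Python sketch, assuming a hypothetical list of zone apexes, selects that zone by comparing labels from the root downwards. It ignores the iterative referral process that real resolvers follow, as well as case-insensitive label comparison.

```python
from typing import Iterable, List, Optional

def labels(name: str) -> List[str]:
    """Labels of a fully qualified name, ordered from the root downwards."""
    return [label for label in name.rstrip(".").split(".") if label][::-1]

def encloses(zone: str, name: str) -> bool:
    """True if `name` is the zone apex itself or lies below it in the tree."""
    z, n = labels(zone), labels(name)
    return n[:len(z)] == z

def authoritative_zone(name: str, zones: Iterable[str]) -> Optional[str]:
    """The deepest zone that encloses `name`, i.e. the closest delegation."""
    candidates = [zone for zone in zones if encloses(zone, name)]
    return max(candidates, key=lambda zone: len(labels(zone)), default=None)

ZONES = [".", "uy.", "edu.uy.", "fing.edu.uy."]   # hypothetical delegation chain
print(authoritative_zone("www.fing.edu.uy.", ZONES))  # fing.edu.uy.
print(authoritative_zone("www.example.uy.", ZONES))   # uy.
```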

Already in the early 1990s, serious security flaws were discovered by Bellovin and eventually reported in [37]. Different types of security issues concerning the operation of DNS have been discussed in the literature; see, for instance, [38, 37, 39, 40, 41, 42]. The identified vulnerabilities of DNS make it possible to launch different kinds of attacks, namely: cache poisoning, client flooding, dynamic update vulnerability, information leakage and compromise of the DNS server's authoritative database [43, 37, 44, 45, 46].

Domain Name System Security Extensions (DNSSEC) [47, 48, 49] is a suite of Internet Engineering Task Force (IETF) specifications for securing information provided by DNS. More specifically, this suite specifies a set of extensions to DNS which are oriented to provide mechanisms that support authentication and integrity of DNS data but not its availability or confidentiality. In particular, the security extensions were designed to protect resolvers from forged DNS data, such as the one generated by DNS cache poisoning, by digitally signing DNS data using public-key cryptography. The keys used to sign the information are authenticated via a chain of trust, starting with a set of verified public keys that belong to the DNS root zone, which is the trusted third party.
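A deliberately simplified Python sketch of chain-of-trust validation follows. It assumes that each parent zone publishes a digest of each child's public key (playing the role of a DS record) and that the parent's signatures over these digests have already been verified. Real DS records also encode the key tag, algorithm and digest type, and real validation checks RRSIG signatures at every step; the function `chain_of_trust` and the key material are hypothetical.

```python
import hashlib
from typing import Dict, List

def digest(public_key: bytes) -> str:
    """Stand-in for a DS record: a SHA-256 digest of the child's public key."""
    return hashlib.sha256(public_key).hexdigest()

def chain_of_trust(path: List[str], keys: Dict[str, bytes],
                   ds: Dict[str, str], trust_anchor: str) -> bool:
    """`path` lists zones from the root down to the target zone.
    `keys` maps each zone to the public key it publishes; `ds` maps each
    non-root zone to the digest its parent publishes for it. Signature
    checks are omitted: each `ds` entry is assumed to be already
    authenticated with the parent's key."""
    if digest(keys[path[0]]) != trust_anchor:       # root key must match the anchor
        return False
    for child in path[1:]:
        if ds.get(child) != digest(keys[child]):    # parent must vouch for the child key
            return False
    return True

keys = {".": b"root-key", "uy.": b"uy-key", "edu.uy.": b"edu-key"}
ds = {"uy.": digest(b"uy-key"), "edu.uy.": digest(b"edu-key")}
anchor = digest(b"root-key")
path = [".", "uy.", "edu.uy."]
print(chain_of_trust(path, keys, ds, anchor))                                # True
print(chain_of_trust(path, {**keys, "edu.uy.": b"forged-key"}, ds, anchor))  # False
```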

The DNSSEC standards were finally released in 2005 and a number of testbeds, pilot deployments, and services have been rolled out in the last few years [50, 51, 52, 53, 54, 55, 56]. In particular, the main objective of the OpenDNSSEC project [55] is to develop open source software that manages the security of domain names on the Internet.

Contributions

The important and critical role of DNSSEC in software systems and networks makes it a prime target for formal analysis. This work presents the development of a minimalistic specification of a DNSSEC model that nonetheless provides a realistic setting in which to explore the security issues that pertain to the realm of DNS. The specification puts forward an abstract formulation of the behavior of the protocol and the corresponding security-related events, where security goals, such as the prevention of cache poisoning attacks, can be given a formal treatment. In particular, the formal model provides the grounds needed to formally state and verify security properties concerning the chain of trust generated along the DNSSEC tree.

1.5 Formal language used

The Coq proof assistant [13, 14] is free, open source software that provides a (dependently typed) functional programming language and a reasoning framework based on higher-order logic to perform proofs of programs. Coq allows the development of mathematical facts. This includes defining objects (sets, lists, functions, programs); making statements (using basic predicates, logical connectives and quantifiers); and finally writing proofs. The Coq environment supports advanced notations, proof search and automation, and modular developments. It also provides program extraction towards languages like OCaml and Haskell for the execution of (certified) algorithms [57]. These features are very useful to formalize and reason about complex specifications and programs.

As examples of its applicability, Coq has been used as a framework for formalizing programming environments and designing special platforms for software verification: Leroy and others developed in Coq a certified optimizing compiler for a large subset of the C programming language [58]; Barthe and others used Coq to develop Certicrypt, an environment of formal proofs for computational cryptography [59]. Also, as mentioned previously, the Gemalto and Trusted Logic companies obtained the level CC EAL 7 of certification for their formalization, developed in Coq, of the security properties of the JavaCard platform.

We developed our specifications in the Calculus of Inductive Constructions (CIC) [60, 61] using Coq. The CIC is a type theory, in brief, a higher-order logic in which the individuals are classified into a hierarchy of types. The types work very much as in strongly typed functional programming languages, which means that there are basic elementary types, types defined by induction, like sequences and trees, and function types. An inductive type is defined by its constructors, and its elements are obtained as finite combinations of these constructors. Data types are called “Sets” in the CIC (in Coq). When the requirement of finiteness is removed, we obtain the possibility of defining infinite structures, called coinductive types, like infinite sequences. On top of this, a higher-order logic is available which serves to predicate on the various data types. The interpretation of the propositions is constructive, i.e. a proposition is defined by specifying what a proof of it is, and a proposition is true if and only if a proof of it has been constructed. The type of propositions is called Prop.
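For illustration, here is an inductive type and a proposition about it written in Lean 4, a proof assistant whose type theory is a close relative of the CIC; the Coq syntax differs, and this is not taken from our development. Elements of `Tree` are finite combinations of its two constructors, `Tree.size` is defined by structural recursion, and the proposition `Tree.size_pos` is accepted because a proof of it is constructed. Coinductive types are not shown.

```lean
-- An inductive type: every element is a finite combination of these constructors.
inductive Tree where
  | leaf : Tree
  | node : Tree → Tree → Tree

-- Structural recursion on the constructors; termination is guaranteed.
def Tree.size : Tree → Nat
  | .leaf => 1
  | .node l r => l.size + r.size + 1

-- A proposition (of sort `Prop`), accepted because a proof of it is constructed.
theorem Tree.size_pos (t : Tree) : 0 < t.size := by
  cases t <;> simp only [Tree.size] <;> omega
```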

1.6 Document outline

The remainder of this document is organized as follows. In Section 2 we study and compare several variants of the security model specified by MIDP to access sensitive resources of a device. In Section 3 we present the formalization of an idealized model of a hypervisor. We establish (formally) that the hypervisor ensures strong isolation properties between the different operating systems, and guarantees that requests from guest operating systems are eventually attended to. We then develop a certified implementation of a hypervisor, in the programming language of Coq, on an extended memory model with a formalization of the cache and TLB, and we also analyze security properties for the extended model with execution errors. In Section 4 we present a minimalistic specification of a DNSSEC model which provides the grounds needed to formally state and verify security properties concerning the chain of trust of the DNSSEC tree. Finally, the conclusions of the research are formulated in Section 5.

2 Formal analysis of security models for mobile devices

JME technology provides integral mechanisms that guarantee security properties for mobile devices, defining a security model which restricts the access of badly or maliciously written applications to sensitive functions. The architecture of JME is based upon two layers above the virtual machine: the configuration layer and the profile layer. The Connected Limited Device Configuration (CLDC) is a minimum set of class libraries to be used in devices with slow processors, limited working memory and the capacity to establish low-bandwidth communications. This configuration is complemented with the Mobile Information Device Profile (MIDP) to obtain a run-time environment suitable for mobile devices such as mobile phones, personal digital assistants and pagers. The profile also defines an application life cycle, a security model and APIs that provide the functionality required by mobile applications, such as networking, user interface, push activation, and local persistent storage.

This section builds upon a number of previously published papers [62, 63, 64, 65]. The rest of the section is organized as follows: Section 2.1 presents the MIDP security model; Section 2.2 overviews the formalization of the MIDP 2.0 security model, presents some of its verified properties, and proposes a methodology to refine the specification and obtain an executable prototype. It also briefly describes a high-level formal specification of an access controller for JME-MIDP 2.0 and an algorithm that has been proved correct with respect to that specification. Section 2.3 develops extensions of the formal specification presented in Section 2.2 concerning the changes introduced by MIDP 3.0, and puts forward some weaknesses of the security mechanisms introduced in that (latest) version of the profile. Section 2.4 introduces a framework suitable for defining, analyzing, and comparing the access control policies that can be enforced by (variants of) the security models considered in this work. Finally, Section 2.5 discusses related work and Section 2.6 presents conclusions and future work. The full development is available for download at http://www.fing.edu.uy/inco/grupos/gsi/sources/midp.

2.1 MIDP security model

A security model at the platform level, based on sets of permissions to access the functions of the device, is defined in the first version of MIDP (version 1.0) and refined in the second version (version 2.0). In the third version (version 3.0) a new dimension to the model is introduced: the security at the application level. The security model at the application level is based on authorizations, so that an application can access the resources shared by another application.

In this section we describe the two security levels considered by MIDP in its different versions.

2.1.1 Security at the platform level

In the original MIDP 1.0 specification, any application not installed by the device manufacturer or a service provider runs in a sandbox that prohibits access to security sensitive APIs or functions of the device (e.g. push activation). Although this sandbox security model effectively prevents any rogue application from jeopardizing the security of the device, it is excessively restrictive and does not allow many useful applications to be deployed after issuance.

In contrast, MIDP 2.0 introduces a new security model at the platform level based on the concept of protection domain. A protection domain specifies a set of permissions and the modes in which those permissions can be granted to applications bound to the domain. Each API or function on the device may define permissions in order to prevent it from being used without authorization.

Permissions can be granted either unconditionally, and then used without requiring any intervention from the user, or conditionally, specifying the frequency with which user interaction will be required to renew the permission. More concretely, for each permission a protection domain specifies one of three possible modes: blanket, where the permission is granted for the whole application life cycle; session, where the permission is granted until the application is closed; and oneshot, where the permission is granted for one single use.

The set of permissions effectively granted to an application is determined by the permissions declared in its descriptor, the protection domain it is bound to, and the answers received from the user to previous requests. A permission is granted to an application if it is declared in its application descriptor and either its protection domain unconditionally grants the permission, or the user was previously asked to grant the permission and its authorization remains valid.
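The grant rule above can be illustrated with a minimal Python sketch. It is not the Coq formalization: the class and method names (`Suite`, `user_grants`, `use`, `close`) are hypothetical, and the sketch assumes that a user's acceptance stays valid for the mode that the protection domain specifies for that permission.

```python
from enum import Enum


class Mode(Enum):
    BLANKET = "blanket"   # valid for the whole application life cycle
    SESSION = "session"   # valid until the application is closed
    ONESHOT = "oneshot"   # valid for one single use


class Suite:
    """Hypothetical model of one installed suite and its user authorizations."""

    def __init__(self, declared, allowed, user_modes):
        self.declared = set(declared)        # permissions declared in the descriptor
        self.allowed = set(allowed)          # granted unconditionally by the domain
        self.user_modes = dict(user_modes)   # perm -> Mode, granted only if the user agrees
        self.valid_answers = {}              # perm -> Mode of a still-valid user grant

    def user_grants(self, perm):
        """Record that the user accepted a request for `perm`."""
        if perm in self.user_modes:
            self.valid_answers[perm] = self.user_modes[perm]

    def use(self, perm):
        """Return True if `perm` is granted for this use."""
        if perm not in self.declared:
            return False
        if perm in self.allowed:
            return True
        mode = self.valid_answers.get(perm)
        if mode is None:
            return False
        if mode is Mode.ONESHOT:
            del self.valid_answers[perm]     # a one-shot grant is spent by this use
        return True

    def close(self):
        """Closing the application discards every non-blanket answer."""
        self.valid_answers = {p: m for p, m in self.valid_answers.items()
                              if m is Mode.BLANKET}
```

A one-shot acceptance is consumed by its single use, a session acceptance survives until `close`, and a blanket acceptance survives closing the application.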

The MIDP 2.0 model distinguishes between untrusted and trusted applications. An untrusted application is one whose origin and integrity cannot be determined. These applications, also called unsigned, are bound to a protection domain with permissions equivalent to those in a MIDP 1.0 sandbox. On the other hand, a trusted application is identified and verified by means of cryptographic signatures and certificates based on the X.509 Public Key Infrastructure [66]. These signed applications can be bound to more specific and permissive protection domains. Thus, this model enables applications developed by trusted third parties to be downloaded and installed after issuance of the device without compromising its security.

2.1.2 Security at the application level

In MIDP 3.0 applications which are bound to different protection domains may share data, components, and events. The Record Management System (RMS) API specifies methods that make it possible for record stores from one application to be shared with other applications. With the Inter-MIDlet Communication (IMC) protocol an application can access a shareable component from another one running in a different execution environment. Applications that register listeners or register themselves will only be launched in response to events triggered by authorized applications.

By restricting access to shareable resources of an application, such as data, runtime IMC communication and events, the MIDP 3.0 security model introduces a new dimension at the application level. This new dimension introduces the concept of access authorization, which enables an application to individually control access requests from other applications.

An application that intends to restrict access to its shareable resources must declare access authorizations in its application descriptor. There are four different types of access authorization declarations, involving one or more of the following application credentials: domain name, vendor name, and signing certificates. These four types apply, respectively, to: applications bound to a specific domain; applications from a certain vendor with a certified signature; applications from a certain vendor but without a certified signature; and applications signed under a given certificate.

When an application tries to access the shareable resources of another application, its credentials are compared with those required by the access authorization declaration. If there is a mismatch the application level access is denied.
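The comparison of credentials against access authorization declarations can be sketched as follows. The type and field names are hypothetical; signature verification is assumed to have been done by the platform, so the "signed vendor" case only checks that the client is signed at all; and the sketch assumes that access is granted when at least one declaration matches, and that an application with no declarations does not restrict access.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Credentials:
    domain: str                 # protection domain the client is bound to
    vendor: str                 # vendor name from the client's descriptor
    signer: Optional[str]       # signing certificate id, None if unsigned


@dataclass(frozen=True)
class AccessAuthorization:
    kind: str                   # "domain", "signed_vendor", "vendor" or "certificate"
    value: str


def matches(auth: AccessAuthorization, cred: Credentials) -> bool:
    if auth.kind == "domain":
        return cred.domain == auth.value
    if auth.kind == "signed_vendor":
        return cred.vendor == auth.value and cred.signer is not None
    if auth.kind == "vendor":
        return cred.vendor == auth.value    # no signature is checked here
    if auth.kind == "certificate":
        return cred.signer == auth.value
    raise ValueError(f"unknown access authorization kind: {auth.kind}")


def application_level_access(declarations, cred: Credentials) -> bool:
    """Deny on mismatch; grant if some declaration matches the credentials."""
    if not declarations:
        return True
    return any(matches(a, cred) for a in declarations)
```

Note that the vendor-only case accepts an unsigned client whose descriptor merely states the right vendor name.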

2.2 Formally verifying security properties of MIDP 2.0

In this section we overview the formalization of the MIDP 2.0 security model presented in [62].

2.2.1 Applications

In MIDP, applications (usually called MIDlets) are packaged and distributed as suites. A suite may contain one or more MIDlets and is distributed as two files, an application descriptor file and an archive file that contains the actual Java classes and resources. A suite that uses protected APIs or functions should declare the corresponding permissions in its descriptor either as required for its correct functioning or as optional. The content of a typical MIDlet descriptor is shown in Figure 1.

MIDlet-Jar-Size: 90210

MIDlet-Name: Organizer

MIDlet-Vendor: Foo Software

MIDlet-Version: 2.1

MIDlet-1: Alarm, alarm.png, organizer.Alarm

MIDlet-2: Agenda, agenda.png, organizer.Agenda

MIDlet-Permissions: javax.microedition.io.Connector.socket, javax.microedition.io.PushRegistry

MIDlet-Permissions-Opt: javax.microedition.io.Connector.http

MicroEdition-Configuration: CLDC-1.1

MicroEdition-Profile: MIDP-2.0

MIDlet-Certificate-1-1: MIICgDCCAekCBEH11wYwDQ...

MIDlet-Jar-RSA-SHA1: pYRJ8Qlu5ITBLxcAUzYXDKnmg...
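To make the role of the descriptor concrete, the following Python sketch extracts the required and optional permission sets from attributes like those in Figure 1. It assumes one `Name: value` attribute per line, as in the figure, and does not attempt to implement the complete descriptor syntax; the function names are hypothetical.

```python
def parse_descriptor(text):
    """Parse 'Attribute-Name: value' lines into a dict (one attribute per line)."""
    attrs = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, sep, value = line.partition(":")   # split at the first colon only
        if not sep:
            raise ValueError(f"malformed descriptor line: {line!r}")
        attrs[name.strip()] = value.strip()
    return attrs


def permission_set(attrs, key):
    """Split a comma-separated permission attribute into a set of names."""
    return {p.strip() for p in attrs.get(key, "").split(",") if p.strip()}


SAMPLE = """\
MIDlet-Name: Organizer
MIDlet-Vendor: Foo Software
MIDlet-Permissions: javax.microedition.io.Connector.socket, javax.microedition.io.PushRegistry
MIDlet-Permissions-Opt: javax.microedition.io.Connector.http
"""

attrs = parse_descriptor(SAMPLE)
required = permission_set(attrs, "MIDlet-Permissions")
optional = permission_set(attrs, "MIDlet-Permissions-Opt")
```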

2.2.2 Device state

To reason about the MIDP 2.0 security model most details of the device state may be abstracted; it is sufficient to specify the set of installed suites, the permissions granted or revoked to them and the currently active suite, in case there is one. The active suite and the permissions granted or revoked to it for the session are grouped into a structure within the state.

We define a notion of valid state, through a predicate on states, that captures essential properties of the platform. For instance, one such property states that a MIDlet suite can be installed and bound to a protection domain only if the set of permissions declared as required in its descriptor is a subset of the permissions the domain offers (with or without user authorization) [62].
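The installation property can be sketched in Python as one conjunct of a validity predicate. The names (`Domain`, `Suite`, `bindable`, `valid`, `install`) are hypothetical; the actual predicate in [62] is a Coq definition with further conjuncts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    allowed: frozenset    # permissions granted without asking the user
    user: frozenset       # permissions granted only with user authorization


@dataclass(frozen=True)
class Suite:
    name: str
    required: frozenset   # permissions declared as required in the descriptor
    domain: Domain


def bindable(suite):
    """Required permissions must be offered by the domain, with or without user authorization."""
    return suite.required <= (suite.domain.allowed | suite.domain.user)


def valid(installed):
    """One conjunct of a validity predicate: every installed suite is bindable."""
    return all(bindable(s) for s in installed)


def install(installed, suite):
    """Install only when the condition holds; otherwise leave the state unchanged."""
    if not bindable(suite):
        return installed
    return installed | {suite}
```

Because `install` never adds a suite that violates the condition, starting from a valid set of installed suites it always produces a valid one.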

2.2.3 Events

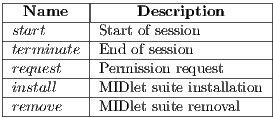

We define a set of the events that are relevant to our abstraction of the device state (Table 1). The user may be presented with the choice between accepting or refusing an authorization request, specifying the period of time their choice remains valid.

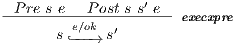

The behaviour of the events is specified by their pre- and postconditions, given by two predicates. Preconditions are defined in terms of the device state, while postconditions are defined in terms of the before and after states and an optional response, which is only meaningful for the event that requests a permission and indicates whether the requested operation is authorized [62].

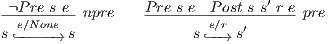

2.2.4 One-step execution

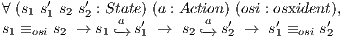

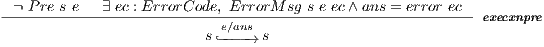

The behavioural specification of the execution of an event is given by a one-step execution relation, defined by two introduction rules: whenever an event occurs for which the precondition does not hold, the state must remain unchanged; otherwise, the state may change in such a way that the event postcondition is established. The notation $s \xrightarrow{e/r} s'$ may be read as "the execution of the event $e$ in state $s$ results in a new state $s'$ and produces a response $r$".
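The two introduction rules can be mirrored by a Python predicate that checks whether a given transition belongs to the relation. This is only a sketch: `EventSpec`, `is_step` and the `terminate` example are hypothetical, states are arbitrary Python values, and the response is left unconstrained when the precondition fails, since the prose above only requires the state to remain unchanged.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class EventSpec:
    pre: Callable[[Any], bool]                          # precondition on the state
    post: Callable[[Any, Any, Optional[bool]], bool]    # relates s, s' and response r


def is_step(spec, s, r, s2):
    """Does executing the event in state s yield state s2 with response r?"""
    if not spec.pre(s):
        return s2 == s          # precondition fails: the state must stay unchanged
    return spec.post(s, s2, r)  # otherwise the postcondition must be established


# Hypothetical event: terminate the active suite (state = active suite or None).
terminate = EventSpec(pre=lambda s: s is not None,
                      post=lambda s, s2, r: s2 is None)
```

Writing the specification as a relation (rather than as a function computing $s'$) leaves open every implementation that establishes the postcondition.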

2.2.5 Sessions

A session is the period of time spanning from a successful event that starts a suite to the event that terminates it, in which a single suite remains active. A session for a suite with identifier $id$ is determined by an initial state $s_0$ and a sequence of steps $\langle e_i, s_i, r_i \rangle$ ($i = 1, \ldots, n$) such that the following conditions hold:

- the first event $e_1$ starts the suite $id$;
- the precondition of $e_1$ holds in $s_0$;
- the last event $e_n$ terminates the active suite;
- no intermediate event $e_i$ ($1 < i < n$) terminates the active suite;
- for every $i \in \{1, \ldots, n\}$, the execution of $e_i$ in state $s_{i-1}$ results in state $s_i$ and produces response $r_i$.
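The session conditions above can be checked on a concrete trace with the following Python sketch. The event encoding (`("start", id)`, `("request", perm)`, `("terminate",)`) and the toy model, in which the state is just the identifier of the active suite, are hypothetical simplifications; the precondition and step relation are passed in as parameters.

```python
def is_session(suite_id, s0, steps, pre, step):
    """Check the session conditions on a trace of (event, state_after, response)."""
    if len(steps) < 2:
        return False
    events = [e for e, _, _ in steps]
    if events[0] != ("start", suite_id):     # the first event starts the suite
        return False
    if not pre(s0, events[0]):               # ... and its precondition holds
        return False
    if events[-1] != ("terminate",):         # the last event terminates it
        return False
    if ("terminate",) in events[1:-1]:       # no intermediate termination
        return False
    prev = s0
    for e, s, r in steps:                    # every step is a valid execution
        if not step(prev, e, r, s):
            return False
        prev = s
    return True


# Toy model: the state is the identifier of the active suite, or None.
def toy_pre(s, e):
    return s is None if e[0] == "start" else s is not None


def toy_step(s, e, r, s2):
    if not toy_pre(s, e):
        return s2 == s
    if e[0] == "start":
        return s2 == e[1]
    if e[0] == "terminate":
        return s2 is None
    return s2 == s
```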

2.2.6 Verification of security properties

This section mentions some relevant security properties of the model [1].

We call one-step invariant a property that remains true after the execution of every event if it is true before. We prove that the validity of the device state is a one-step invariant of our specification: for any states $s$ and $s'$, event $e$ and response $r$, if $s$ is valid and the execution of $e$ in $s$ results in $s'$ with response $r$, then $s'$ is also valid.

We call a step property a session invariant if, once it is established in some step of a session, it holds for the rest of that session. We prove that state validity is a session invariant.

Perhaps a more interesting property is a guarantee of the proper enforcement of revocation. We prove that once a permission is revoked by the user for the rest of a session, any further request for the same permission in the same session is refused [62].
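In the formalization this property is proved once and for all in Coq. As a lightweight illustration of what it states, the following Python sketch processes the requests of one session and then checks the property on the resulting log at run time. The answer encoding (`"grant_oneshot"`, `"deny_session"`, and so on) and the function names are hypothetical.

```python
def run_session(requests, ask_user):
    """Process the permission requests of one session.

    ask_user(perm) returns "grant_oneshot", "grant_session", "deny_oneshot"
    or "deny_session". Returns a log of (perm, answer or None, granted)."""
    session_answers = {}                  # perm -> granted, valid until session end
    log = []
    for perm in requests:
        if perm in session_answers:       # a session-scoped answer is reused
            log.append((perm, None, session_answers[perm]))
            continue
        answer = ask_user(perm)
        granted = answer.startswith("grant")
        if answer.endswith("_session"):
            session_answers[perm] = granted
        log.append((perm, answer, granted))
    return log


def revocation_enforced(log):
    """After a session revocation of a permission, no later request is granted."""
    revoked = set()
    for perm, answer, granted in log:
        if perm in revoked and granted:
            return False
        if answer == "deny_session":
            revoked.add(perm)
    return True
```

Such a check only covers the traces it is run on, which is precisely the gap that the machine-checked proof closes.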

2.2.7 Executable specification

In the formalization described previously, the behaviour of events is specified implicitly as a binary relation on states instead of explicitly as a state transformer. Moreover, the described formalization is higher-order because, for instance, predicates are used to represent part of the device state and the transition semantics of events is given as a relation on states. The most evident consequence of this choice is that the resulting specification is not executable; in particular, the mechanism provided by Coq to extract programs from specifications cannot be used in this case. However, had we constructed a more concrete specification at first, we would have had to take arbitrary design decisions from the beginning, unnecessarily restricting the allowable implementations and complicating the verification of properties of the security model.

We show in [62] that it is feasible to obtain an executable specification from our abstract specification. The methodology we propose also produces a proof that the former is a refinement of the latter, thus guaranteeing soundness of the entire process. The methodology is inspired by the work of Spivey [67] on operation and data refinement, and the more comprehensive works of Back and von Wright [68] and Morgan [69] on refinement calculus.

In [63], we developed a high-level formal specification of an Access Control module (AC) of JME-MIDP 2.0, and described a certified algorithm that satisfies the proposed specification. In this algorithm, when a method invokes a protected function or API, the access controller checks that the method has the corresponding permission and that the response from the user, if required, permits the access. If this check is successful, the access is granted; otherwise, the action is denied and a security exception is thrown.
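The shape of the access controller check can be sketched in Python as follows. This is not the certified algorithm of [63]; the function and parameter names are hypothetical, and the exception class is simply named after Java's `java.lang.SecurityException`.

```python
class SecurityException(Exception):
    """Raised when access is denied (named after Java's java.lang.SecurityException)."""


def check_access(perm, domain_perms, needs_user, ask_user):
    """Access controller check sketch.

    domain_perms: permissions available to the calling method's suite
    needs_user:   subset of domain_perms that require a user answer
    ask_user:     callable perm -> bool, the user's response
    """
    if perm not in domain_perms:
        raise SecurityException(f"permission {perm} not held")
    if perm in needs_user and not ask_user(perm):
        raise SecurityException(f"user refused {perm}")
    return True    # access granted
```

Note that, unlike the JSE access controller, this check inspects only the calling suite's permissions and does not walk the call stack.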

2.3 Formally verifying security properties of MIDP 3.0

Two important enhancements in the (informal) MIDP 3.0 specification are Inter-MIDlet Communication (IMC) and Events. The IMC protocol enables MIDP 3.0 applications to communicate and collaborate among themselves. More generally, MIDP 3.0 provides the following capabilities: i) it enables and specifies proper behavior for MIDlets, for instance allowing multiple concurrent MIDlets and inter-MIDlet communication (both direct and indirect, using events); ii) it enables shared libraries for MIDlets; and iii) it increases functionality in all areas, including secure RMS stores, removable/remote RMS stores, IPv6, and multiple network interfaces per device.

In [64], the formalization described in Section 2.2 is extended so as to model security at the application level, introduced in Section 2.1. That work presents the extended device state (with allowed and denied access authorizations), a new condition for state validity, and the authorization event, which models the access authorization request from a MIDlet suite to the shared resources of the active suite. It also examines the validity of the security properties already proved for MIDP 2.0, as well as new properties related to MIDP 3.0. Moreover, an algorithm for the access authorization of applications was developed and its correctness proven. Additionally, certified algorithms have been developed for application installation and communication between applications (IMC protocol) in MIDP 3.0 [70, 71].

Weaknesses of the security mechanisms of MIDP 3.0

MIDP 3.0 allows component-based programming. An application can access a shareable component running in a different execution environment through thin client APIs, like the IMC protocol previously mentioned. The shareable component handles requests from applications following the authorization-based security policy. This policy considers authorization access declarations and the credentials shown by the potential client applications, to grant or deny access.

The platform and application security levels were conceived as independent and complementary frameworks; however, the (unsafe) interplay of some of the defined security mechanisms may lead to (unexpected) violations of security policies. In what follows, some potential weaknesses of MIDP 3.0 are put forward and a plausible (insecure) scenario is discussed [1].

In the first place, while at the platform level permissions are defined for each sensitive function of the device, at the application level access authorization declarations do not distinguish between different shareable components of the same MIDlet suite. Thus, once its credentials have been validated, a client application may access all the components shared by another application. In the second place, although permissions are granted in various modes (blanket, session, oneshot), access authorization can only be granted permanently. Finally, at the platform level the binding of an application to a protection domain is based on the X.509 Public Key Infrastructure. At the application level the same infrastructure is used to verify the integrity and authenticity of applications, except in one case: when the vendor name is used as the only credential needed to grant access authorization.

Considering the previously established elements, the following scenario is feasible. Let $A$ be an application which is bound to a certain protection domain and has a shareable component. The shareable component uses a sensitive function $f$ of the device (granted by the protection domain) in order to implement its service. The application $A$ declares access authorizations for unsigned applications from a certain vendor $V$. Let $B$ be an unsigned application which is bound to a different protection domain and has been denied permission to access the sensitive function $f$. Now, if the application $B$ is capable of providing just the vendor name $V$ as credential, it is granted permanent access to the shareable component. Thereby, $B$ is able to access the sensitive function $f$ of the device. This is a clear example of unwanted behavior, in which a sensitive function is accessed by an application without the corresponding permission.

Two observations are drawn from the previous scenario. On the one hand, a stronger access authorization declaration is necessary. If declarations based only on the vendor name are left aside, all the remaining ones demand the integrity and authenticity of signatures and certificates. This will result in a more reliable security model. On the other hand, to avoid circumventing the security at the platform level two aspects should be considered. The first one is to declare the permissions of the protection domain exposed by a shareable component. The second aspect is to extend the security policy by conceding those permissions to sensitive functions when being accessed by applications through shareable resources.
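The two remedies can be sketched as a single hardened check. This is one possible reading, not a mechanism defined by MIDP: the table of permissions exposed by each component and the function name are hypothetical, and signature verification is reduced to membership in a set of trusted signers.

```python
PLATFORM_PERMS = {"A": {"f"}, "B": set(), "C": {"f"}}   # hypothetical domains
EXPOSED = {"A.component": {"f"}}   # permissions each shareable component exposes


def hardened_access(component, client, client_signer, trusted_signers):
    """Grant access only if (1) the client is signed by a trusted certificate
    (no vendor-name-only declarations) and (2) the client itself holds every
    platform permission the component exposes."""
    if client_signer not in trusted_signers:
        return False
    return EXPOSED[component] <= PLATFORM_PERMS[client]
```

Under this check the unsigned application `B` of the scenario is rejected, and even a signed `B` would be rejected because it lacks `f`.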

2.4 A framework for defining and comparing access control policies

In [29], a security model for interactive mobile devices is put forward which can be seen as an extension of that of MIDP. The work presented in this section, based on [65, 1], has focused on developing a formal model for studying, in particular, interactive user querying mechanisms for granting permissions for application execution on mobile devices. As in the MIDP case, the notion of permission is central to this model. A generalisation of the one-shot permission described above is proposed, which consists in associating a multiplicity with a permission, stating how many times that permission can be used.

The proposed model has two basic constructs for manipulating permissions: grant and consume. The grant construct models the interactive querying of the user, asking whether they grant a particular permission with a certain multiplicity. The consume construct models the access to a sensitive function which is protected by the security policy, and therefore requires (consumes) permissions.
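A minimal Python sketch of the two constructs follows. The class name is hypothetical, `math.inf` stands for an unbounded multiplicity, and a new grant is assumed to overwrite the previous multiplicity; the original model may combine grants differently. A one-shot permission corresponds to a grant with multiplicity 1.

```python
import math


class Permissions:
    """Multiplicity-based permissions; math.inf stands for an unbounded grant."""

    def __init__(self):
        self.count = {}

    def grant(self, perm, multiplicity):
        # assumption: a new grant overwrites the previous multiplicity
        self.count[perm] = multiplicity

    def consume(self, perm):
        if self.count.get(perm, 0) <= 0:
            raise PermissionError(f"no remaining uses of {perm}")
        self.count[perm] -= 1   # math.inf - 1 is still math.inf
```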

A semantics of the model constructs is proposed as well as a logic for reasoning on properties of the execution flow of programs using those constructs. The basic security property the logic allows one to prove is that a program will never attempt to access a resource for which it does not have a permission. The authors also provide a static analysis that makes it possible to verify that a particular combination of the grant-consume constructs does not violate that security property. For developing that kind of analysis the constructs are integrated into a program model based on control-flow graphs. This model has also been used in previous work on modelling access control for Java, see for instance [72, 73].
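To illustrate the property such an analysis establishes, the following Python sketch checks a small acyclic control-flow graph by enumerating all its paths. It is deliberately naive and is not the analysis of [29]: path enumeration is exponential and does not handle loops, whereas a real static analysis abstracts over paths. The CFG encoding is hypothetical, and grants are assumed to overwrite previous multiplicities.

```python
def safe(cfg, entry):
    """Return True if no path of an acyclic CFG consumes a permission it does not hold.

    cfg: node -> (instruction, list of successor nodes), where an instruction is
    ("grant", perm, n), ("consume", perm) or ("nop",).
    """
    def visit(node, counts):
        instr, succs = cfg[node]
        counts = dict(counts)                       # each path keeps its own counts
        if instr[0] == "grant":
            counts[instr[1]] = instr[2]             # overwriting grant (assumption)
        elif instr[0] == "consume":
            if counts.get(instr[1], 0) <= 0:
                return False                        # this path violates the property
            counts[instr[1]] -= 1
        return all(visit(n, counts) for n in succs)
    return visit(entry, {})
```

For instance, a program that grants `sms` once and then, on one branch, consumes it twice is reported unsafe, while granting it twice makes every path safe.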

One of the objectives of the work reported in [65, 1] has been to build a framework which provides a formal setting to define the permission models of MIDP and the one presented in [29] (and variants of it) in a uniform way, and to perform a formal analysis and comparison of those models. This framework, which is formally defined using the CIC, adopts, with variations, most of the security and programming constructions defined in [29]. In particular, it has been modified so as to be parameterized by permission granting policies, whereas in the original work this relation is fixed.

2.5 Related work

Some effort has been put into the evaluation of the security model for MIDP 2.0; Kolsi and Virtanen [74] and Debbabi et al. [75] analyze the application security model, spot vulnerabilities in various implementations and suggest improvements to the specification. Although these works report on the detection of security holes, they do not intend to prove their absence. The formalizations we overview in this article, however, provide a formal basis for the verification of the model and the understanding of its intricacies.

Various articles analyze access control in Java and C#, see for instance [73, 76, 72, 77]. All these works have mainly focused on the stack inspection mechanism and the notion of granting permissions to code through privileged method calls. The access control check procedures in MIDP do not involve a stack walk. While in the case of the JSE platform there exists a high-level specification of the access controller module (the basic mechanism is based on stack inspection [28]), no equivalent specification exists for JME. In this work, we illustrate the pertinence of the specification of the MIDP (version 2.0) security model that has been developed by specifying and proving the correctness of an access control module for the JME platform.

Besson, Dufay and Jensen [29, 78] have put forward an alternative access control model for mobile devices that generalizes the MIDP model by introducing permissions with multiplicities, extending the way permissions are granted by users and consumed by applications. One of the main outcomes of the work we report in the present paper, based on [65, 1], is a general framework that sets up the basis for defining, analyzing and comparing access control models for mobile devices. In particular, this framework subsumes the security model of MIDP and several variations, including the model defined in [29, 78].

Android [25] is an open platform for mobile devices developed by the Open Handset Alliance led by Google, Inc. Focusing on security, Android combines two levels of enforcement [26, 27]: the Linux system level and the application framework level. At the Linux system level, Android is a multi-process system. The Android security model resembles a multi-user server, rather than the sandbox model found on the JME platform. At the application framework level, Android provides fine-grained control through an Inter-Component Communication reference monitor, which provides Mandatory Access Control enforcement on how applications access the components. There have been several analyses of the security of the Android system, but few of them pay attention to the formal aspects of the permission enforcing framework. In [79], the authors propose an entity-relationship model for the Android permission scheme [80]. This work, the first formalization of the permission scheme enforced by the Android framework, builds a state-based formal model and provides a behavioral specification, based in turn on the specification developed in [62]. The abstract operation set considered in [79] does not include the permission request/revoke operations present in MIDP. In [81], Chaudhuri presents a core language to describe Android applications, and to reason about their dataflow security properties. The paper introduces a type system for security in this language. The system exploits the access control mechanisms already provided by Android. Furthermore, [26] reports a logic-based tool, Kirin, for determining whether the permissions declared by an application satisfy a certain safety invariant. A formal comparison between the JME-MIDP and Android security models is interesting further work. A first informal analysis, however, is developed in the technical report [82], which also details the Android security model and analyzes some of the more recent works that formalize different aspects of this model [83].

Language-based access control has been investigated for some idealised program models, see e.g. [84, 85, 86]. These works make use of static analysis for verifying that resources are accessed according to access control policies specified and that no security violations will occur at run-time. They do not study, though, specific language primitives for implementing particular access control models.

2.6 Summary and future work

We have provided the first verifiable formalization of the MIDP 2.0 security model, according to [62], and have also constructed the proofs of several important properties that should be satisfied by any implementation that fulfills its specification. Our formalization is detailed enough to study how other mechanisms interact with the security model, for instance, the interference between the security rules that control access to the device resources, and mechanisms such as application installation. We have also proposed a refinement methodology that might be used to obtain a sound executable prototype of the security model.

Moreover, a high-level formalization of the JME-MIDP 2.0 Access Control module has also been developed. This specification assumes that the security policy of the device is static, that there exists at most one active suite in every state of the device, and that all the methods of a suite share the same protection domain. The obtained model, however, can be easily extended so as to consider multiple active suites, as well as to specify a finer relation expressing that a method is bound to a protection domain, so that two different methods of the same suite may be bound to different protection domains. With the objective of obtaining a certified executable algorithm of the access controller, the high-level specification has been refined into an equivalent executable one, and an algorithm has been constructed that is proved to satisfy the latter specification. The formal specification and the derived code of the algorithm contribute to the understanding of the working of such an important component of the security model of that platform.

Additionally, we have presented an extension of the formalization of the MIDP 2.0 security model which considers the changes introduced in version 3.0 of MIDP. In particular, a new dimension of security is represented: the security at the application level. This extended specification preserves the security properties verified for MIDP 2.0 and enables the research of new security properties for MIDP 3.0. In this way, the formalization is kept up to date, retaining its validity as a useful tool to analyze the security model of MIDP at both the platform and the application level. Some weaknesses introduced by the informal specification of version 3.0 of MIDP are also discussed, in particular those regarding the interplay of the mechanisms for enforcing security at the application and at the platform level. They reflect potential weaknesses of implementations which satisfy the informal specification [24].

Finally, we have also built a framework that provides a uniform setting to define and formally analyze access control models which incorporate interactive permission requesting/granting mechanisms. In particular, the work presented here has focused on two distinguished permission models: the one defined by version 2.0 (and 3.0) of MIDP and the one defined by Besson et al. in [29]. A characterization of both models in terms of a formal definition of grant policy has also been provided [1]. Other kinds of permission policies can also be expressed in the framework. In particular, it can be adapted to introduce a notion of permission revocation, a permission mode not considered in MIDP. A revocation can be modeled in the permission overwriting approach, for instance, by assigning a zero multiplicity to a resource type. In the accumulative approach, revocation might be modeled using negative multiplicities. Introducing revocations, in turn, makes it possible, without further changes to the framework, to model a notion of permission scope. One such scope could be understood as the session interval delimited by an activation and a revocation of that permission.
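The parameterization by grant policies, and the two ways of modeling revocation just described, can be sketched in Python. The names are hypothetical, and clamping accumulated multiplicities at zero is an assumption of this sketch rather than part of the framework.

```python
from typing import Callable, Dict

# A grant policy maps (current multiplicity, granted multiplicity) to the new one.
GrantPolicy = Callable[[int, int], int]


def overwrite(current: int, granted: int) -> int:
    return granted                      # revoking = granting multiplicity 0


def accumulate(current: int, granted: int) -> int:
    return max(0, current + granted)    # revoking = granting a negative multiplicity


def grant(store: Dict[str, int], perm: str, n: int, policy: GrantPolicy) -> None:
    """The grant step, parameterized by the policy."""
    store[perm] = policy(store.get(perm, 0), n)
```

Swapping the policy changes the permission model without touching `grant` itself, which is the point of making the framework policy-parametric.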

The formal development is about 20kLOC of Coq.

Future work includes the study and specification, using the formal setting provided by the framework, of algorithms for enforcing the security policies derived from different sorts of permission models to control access to sensitive resources of the devices. Moreover, one main objective is to extend the framework so as to be able to construct certified prototypes from the formal definitions of those algorithms. Finally, an exhaustive formal comparison between the JME-MIDP and Android security models is proposed as further work. We have begun developing a formal specification of the Android security model in Coq, considering [82, 87, 88, 89, 90, 91, 92], which focuses on the analysis of the permission system in general, and on the scheme of permission re-delegation in particular [93].

3 Formally verifying security properties in an idealized model of virtualization

3.1 Introduction

Virtualization is a prominent technology that allows high-integrity, safety-critical, systems and untrusted, non-critical, systems to coexist securely on the same platform and efficiently share its resources. To achieve the strong security guarantees requested by these application scenarios, virtualization platforms impose a strict control on the interactions between their guest systems. While this control theoretically guarantees isolation between guest systems, implementation errors and side-channels often lead to breaches of confidentiality. This allows a malicious guest system to obtain secret information, such as a cryptographic key, about another guest system.

Over the last few years, there have been significant efforts to prove that virtualization platforms deliver the expected, strong, isolation properties between operating systems. The most prominent efforts in this direction are within the Hyper-V [15, 16] and L4.verified [17] projects, which aim to derive strong guarantees for concrete implementations: more specifically, Murray et al. [33] recently presented a machine-checked information flow security proof for the seL4 microkernel. In comparison with the Hyper-V and L4.verified projects, our proofs are based on an axiomatization of the semantics of a hypervisor, and abstract away many details from the implementation; on the other hand, our model integrates caches and Translation Lookaside Buffers (TLBs), two security-relevant components that are not considered in these works.

There are three important isolation properties for virtualization platforms. On the one hand, read and write isolation state, respectively, that guest operating systems cannot read and cannot write memory that they do not own. On the other hand, OS isolation states that the behavior of a guest operating system does not depend on the previous behavior of other operating systems and does not affect their future behavior. In contrast to read and write isolation, which are safety properties and can be proved with deductive verification methods, OS isolation is a 2-safety property [18, 19] that requires reasoning about two program executions. Unfortunately, the technology for verifying 2-safety properties is not yet fully mature, which makes their formal verification on large and complex programs exceedingly challenging.
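The difference between a safety property and a 2-safety property can be illustrated with a small Python sketch. It is not part of the Coq development: the two-OS toy system, the finite traces and the names `holds_safety`, `holds_2safety`, `run` and `view_a` are all hypothetical. A safety property can be checked on one trace in isolation, whereas a 2-safety property such as OS isolation compares two traces, here requiring that what OS `a` observes never depends on the initial state of OS `b`.

```python
from typing import Callable, Dict, List

State = Dict[str, int]   # toy state: one memory cell per guest OS ("a" and "b")
Trace = List[State]      # finite prefix of an execution

def holds_safety(trace: Trace, bad: Callable[[State], bool]) -> bool:
    """Safety: inspecting one trace suffices; no state on it may be bad."""
    return not any(bad(s) for s in trace)

def holds_2safety(t1: Trace, t2: Trace, view: Callable[[State], int]) -> bool:
    """2-safety on one pair of traces: if the observer's view agrees
    initially, it must agree at every later step."""
    if view(t1[0]) != view(t2[0]):
        return True  # hypothesis not met, nothing to check for this pair
    return all(view(s1) == view(s2) for s1, s2 in zip(t1, t2))

def run(init: State, steps: int, leaky: bool) -> Trace:
    """Toy system: OS b updates its own cell; OS a updates its own cell,
    but in the leaky variant a's update depends on b's cell."""
    s = dict(init)
    trace = [dict(s)]
    for _ in range(steps):
        s = dict(s)
        s["b"] += 1
        s["a"] += s["b"] if leaky else 1
        trace.append(s)
    return trace

def view_a(s: State) -> int:
    return s["a"]  # everything OS a can observe in this toy model
```

Running the leaky system from `{"a": 0, "b": 0}` and from `{"a": 0, "b": 5}` produces traces that individually satisfy any per-trace check such as "a's cell is never negative", yet `holds_2safety` fails on the pair. This is why OS isolation cannot be established by looking at one execution at a time. A real 2-safety property quantifies over all such pairs, not just one.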

3.1.1 A primer on virtualization

This section provides a primer on virtualization, focusing on the elements that are most relevant for our formal model.

Virtualization is a technique used to run multiple operating systems, called guest operating systems, on the same physical machine. The hypervisor, or Virtual Machine Monitor [94], is a thin layer of software that manages the shared resources (e.g. CPU, system memory, I/O devices). It allows guest operating systems to access these resources by providing them with an abstraction of the physical machine on which they run. One of the most important features of a virtualization platform is that its OSs run isolated from one another. In order to guarantee isolation and keep control of the platform, a hypervisor makes use of the different execution modes of a modern CPU: the hypervisor itself and trusted guest OSs run in supervisor mode, in which all CPU instructions are available, whereas untrusted guest operating systems run in user mode, in which privileged instructions cannot be executed.

Historically, there have been two different styles of virtualization: full virtualization and paravirtualization. In the former, each virtual machine is an exact duplicate of the underlying hardware, making it possible to run unmodified operating systems on top of it. When the OS attempts to execute a privileged instruction, the hardware raises a trap that is captured by the hypervisor, which then emulates the behavior of the instruction. In the paravirtualization approach, each virtual machine is a simplified version of the physical architecture. The guest (untrusted) operating systems must then be modified to run in user CPU mode, replacing privileged instructions with hypercalls, i.e. calls to the hypervisor. A hypercall interface allows OSs to perform a synchronous software trap into the hypervisor to perform a privileged operation, analogous to the use of system calls in conventional operating systems. For example, an OS may use a hypercall to request a set of page table updates: the hypervisor validates and applies a list of updates, and returns control to the calling OS when this is completed.
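The page-table-update hypercall just described can be sketched in Python. This is a toy illustration, not Xen's actual interface: the class `ToyHypervisor`, the method `hypercall_update_page_table` and the encoding of an update as a `(virtual address, machine address)` pair are hypothetical. The sketch validates the whole list of updates before applying any of them, so a rejected request leaves the page table unchanged.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Update = Tuple[int, int]  # hypothetical encoding: (virtual address, machine address)

@dataclass
class ToyHypervisor:
    owner: Dict[int, str]  # machine page -> owning OS
    page_tables: Dict[str, Dict[int, int]] = field(default_factory=dict)

    def hypercall_update_page_table(self, caller: str, updates: List[Update]) -> bool:
        """Validate every update first; apply all of them only if all are valid."""
        for _va, ma in updates:
            if self.owner.get(ma) != caller:
                return False  # reject: caller would map a page it does not own
        table = self.page_tables.setdefault(caller, {})
        for va, ma in updates:
            table[va] = ma
        return True  # control returns to the calling OS
```

For instance, with `owner={100: "os1", 200: "os2"}`, a request from `os1` to map `0x1000` to page 100 succeeds, while a request that also tries to map page 200 is rejected as a whole. Validating before applying is one simple way to keep the operation all-or-nothing; the actual policy of a given hypervisor may differ.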

In this work, we focus on the memory management policy of a paravirtualization-style hypervisor, based on the Xen virtualization platform [95]. Several features of the platform are not yet modeled (e.g. I/O devices, the interrupt system, or the possibility of executing on multi-core processors) and are left as future work.

3.1.2 Contents of the rest of the section

This section builds upon the previously published papers [96, 97]. In Section 3.2 we briefly present our formal model. Isolation properties are considered in Section 3.3, whereas availability is discussed in Section 3.4. Section 3.5 presents an extension of the memory model with cache and TLB. Section 3.6 describes the executable semantics of the hypervisor. In Section 3.7 we discuss the isolation theorems for the extended model with execution errors and a proof of transparency. Finally, Section 3.8 considers related work and Section 3.9 summarizes the conclusions and future work. The formal development is available at http://www.fing.edu.uy/inco/grupos/gsi/proyectos/virtualcert.php.

3.2 The model

In this section we briefly present and discuss some aspects of the formal specification of the idealized model. We first introduce the set of states, and the set of actions; the latter include both operations of the hypervisor and of the guest operating systems. The semantics of each action is specified by a precondition and a postcondition. Then, we introduce a notion of valid state and show that state validity is preserved by execution. Finally, we define execution traces.

3.2.1 Informal overview of the memory model

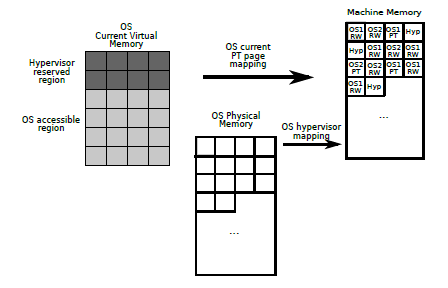

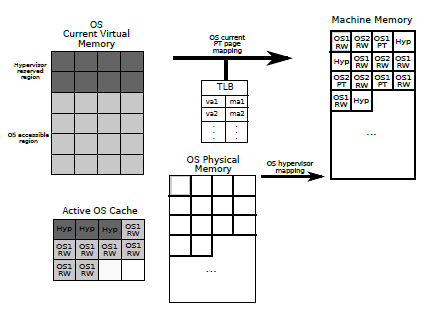

The most important component of the state is the memory model, which we now describe. As illustrated in Figure 2, the memory model involves three types of addresses and two address mappings: machine addresses refer to the real machine memory, physical addresses are used by the guest OS, and virtual addresses are used by the applications running on an operating system.

Virtual memory is used by the applications running on OSs. Each OS stores a partial mapping of virtual addresses to machine addresses, which allows us to represent the translation of the virtual addresses of the applications executing in the OS into real hardware addresses. Moreover, each OS has a designated portion of its virtual address space (usually abbreviated VAS) that is reserved for the hypervisor to attend hypercalls. We say that a virtual address  is accessible by the OS if it belongs to the part of the virtual address space of the OS that is not reserved for the hypervisor. We denote the type of virtual addresses by  .

The physical memory is the one addressed by the kernel of the guest OS. In the Xen [95] platform, this is the type of addresses that the hypervisor exposes to the domains (the untrusted guest OSs in our model). The type of physical addresses is written  .

The machine memory is the real machine memory. A mechanism of page classification was introduced in order to cover concepts from certain virtualization platforms, in particular Xen. The model assumes that each machine address that appears in a memory mapping corresponds to a memory page. Each page has at most one owner, either a particular OS or the hypervisor, and is classified either as a data page with read/write (RW) access or as a page table, where the mappings between virtual and machine addresses reside. A page must be registered (and classified) before it can be used or mapped. The type of machine addresses is written  .
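Page classification and registration can be pictured with the following Python sketch. It is only an illustration under stated assumptions: machine addresses are plain integers, owners are strings (with `"hypervisor"` standing for the hypervisor), and the names `PageKind`, `Page` and `MachineMemory` are hypothetical rather than taken from the formal development. Machine memory is a partial map from machine addresses to classified pages, and an unregistered address is unusable.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional

class PageKind(Enum):
    RW = "data page with read/write access"
    PAGE_TABLE = "page table"

@dataclass
class Page:
    kind: PageKind
    owner: str  # an OS identifier or "hypervisor" (hypothetical encoding)

class MachineMemory:
    """Machine memory as a partial map from machine addresses to classified pages."""

    def __init__(self) -> None:
        self.pages: Dict[int, Page] = {}

    def register(self, ma: int, kind: PageKind, owner: str) -> None:
        if ma in self.pages:
            # each page has at most one owner, so it cannot be registered twice
            raise ValueError(f"page {ma:#x} already registered")
        self.pages[ma] = Page(kind, owner)

    def usable_by(self, ma: int, os_id: str) -> bool:
        """A page can be used only once registered, and only by its owner."""
        page: Optional[Page] = self.pages.get(ma)
        return page is not None and page.owner == os_id
```

After `register(0x10, PageKind.RW, "os1")`, address `0x10` is usable by `os1` but not by `os2`, and address `0x20`, never registered, is usable by nobody.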

As to the mappings, each OS has an associated collection of page tables (one for each application executing on the OS) that map virtual addresses into machine addresses. Since applications use virtual addresses when they execute, the current page table of the OS must change on a context switch so that the application that is now executing can refer to its own address space. Neither applications nor untrusted OSs have permission to read or write page tables, because these actions can only be performed in supervisor mode. Every memory address accessed by an OS needs to be associated with a virtual address. The model must guarantee the correctness of those mappings, namely, that every machine address mapped in a page table of an OS is owned by that OS.

In our model, the mapping that associates, for each OS, physical addresses with machine addresses is maintained by the hypervisor. This mapping may be treated differently by each specific virtualization platform; there are platforms in which it is public and the OS is allowed to manage machine addresses. The physical-to-machine address mapping is modified by the actions  and  [1].
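The two mappings described above can be sketched in Python as nested dictionaries. This is a toy model, not the Coq formalization: addresses are integers, OS and application identifiers are strings, and the functions `translate`, `physical_to_machine` and `mappings_correct` are hypothetical. Each application of an OS has its own page table, so a context switch amounts to selecting a different table. The hypervisor keeps a separate physical-to-machine map per OS, and the correctness condition requires every machine address in an OS's page tables to be owned by that OS.

```python
from typing import Dict, Optional

PageTable = Dict[int, int]               # virtual address -> machine address
PageTables = Dict[str, Dict[str, PageTable]]  # OS -> application -> page table

def translate(page_tables: PageTables, os_id: str, current_app: str,
              va: int) -> Optional[int]:
    """Virtual-to-machine translation through the running application's table."""
    return page_tables[os_id][current_app].get(va)

def physical_to_machine(hyp_map: Dict[str, Dict[int, int]], os_id: str,
                        pa: int) -> Optional[int]:
    """Physical-to-machine translation, using the mapping kept by the hypervisor."""
    return hyp_map.get(os_id, {}).get(pa)

def mappings_correct(page_tables: PageTables, owner: Dict[int, str]) -> bool:
    """Every machine address in any page table of an OS must be owned by that OS."""
    return all(owner.get(ma) == os_id
               for os_id, tables in page_tables.items()
               for table in tables.values()
               for ma in table.values())
```

With `{"os1": {"app1": {0x1000: 100}, "app2": {0x1000: 101}}}`, the same virtual address `0x1000` translates to page 100 or 101 depending on which application is running. The mappings are correct only if both pages are owned by `os1`.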

3.2.2 Formalizing states

The states of the platform are modeled by a record ( ) with six components: the component  indicates which operating system is active, and the components  and  store its corresponding execution and processor modes. The component  stores the information of the guest operating systems of the platform. Finally, the components  and  are the mappings used to formalize the memory model described previously.

We define a notion of valid state ( ) that captures essential properties of the platform. For instance, two of these properties state that: all page tables of an OS  map accessible virtual addresses to pages owned by  and non-accessible ones to pages owned by the hypervisor; and any machine address  that is associated with a virtual address in a page table has a corresponding pre-image, which is a physical address, in the hypervisor mapping.
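The two validity conditions just quoted can be checked on a toy state with the Python sketch below. Several simplifying assumptions apply, and all of them are hypothetical: each OS has a single page table, virtual addresses at or above `RESERVED_START` form the part of the address space reserved for the hypervisor, and `ToyState`, `accessible` and `valid_state` are illustrative names only. The real notion of valid state in the formal model contains more conditions than these two.

```python
from dataclasses import dataclass
from typing import Dict

HYPERVISOR = "hypervisor"
RESERVED_START = 0xF000  # hypothetical: VAs at or above this are reserved for the hypervisor

def accessible(va: int) -> bool:
    return va < RESERVED_START

@dataclass
class ToyState:
    owner: Dict[int, str]                   # machine address -> owner
    page_tables: Dict[str, Dict[int, int]]  # OS -> (virtual -> machine); one table per OS
    hyp_map: Dict[str, Dict[int, int]]      # OS -> (physical -> machine)

def valid_state(s: ToyState) -> bool:
    for os_id, table in s.page_tables.items():
        # machine addresses that have a physical pre-image for this OS
        has_preimage = set(s.hyp_map.get(os_id, {}).values())
        for va, ma in table.items():
            expected_owner = os_id if accessible(va) else HYPERVISOR
            if s.owner.get(ma) != expected_owner:
                return False  # condition 1: wrong owner for this virtual address
            if ma not in has_preimage:
                return False  # condition 2: no physical pre-image
    return True
```

A state in which an accessible address of `os1` points to a hypervisor page, or in which a mapped machine address has no physical pre-image, is rejected.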

3.2.3 Actions and executions

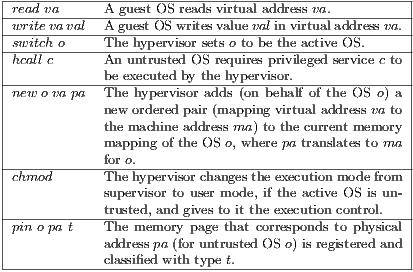

Due to space restrictions, Table 2 summarises only a small subset of the actions specified in the model [1], together with their effects. Actions can be classified as follows:

- hypervisor calls  ,  ,  ,  and  ;
- change of the active OS by the hypervisor ( );
- access, from an OS or the hypervisor, to memory pages (  and  );
- update of page tables by the hypervisor on demand of an untrusted OS or by a trusted OS directly (  and  );
- changes of the execution mode (  ,  );
- changes in the hypervisor memory mapping (  and  ), which are performed by the hypervisor on demand of an untrusted OS or by a trusted OS directly. These actions model (de)allocation of resources.

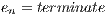

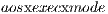

The behaviour of actions is specified by a precondition  and by a postcondition  . The execution of an action is specified by the  relation:

|

Whenever an action occurs for which the precondition holds, the (valid) state may change in such a way that the action postcondition is established. The notation  may be read as: the execution of the action  in a valid state  results in a new state  .

One-step execution preserves valid states. Platform state invariants, such as state validity, are useful for analyzing other relevant properties of the model. In particular, the results presented in this work are established for valid states of the platform.
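The shape of the one-step execution relation can be made concrete with the following Python sketch. It assumes a single toy action, a write, and a stand-in validity invariant. The dictionary keys `"active"`, `"pt"`, `"owner"` and `"mem"` and the functions `valid`, `pre`, `post`, `is_step` and `execute` are hypothetical. A step from `s` to `s2` under action `a` is admitted exactly when `s` is valid, the precondition holds in `s`, and the postcondition relates `s` and `s2`. The function `execute` computes one state satisfying that relation.

```python
from copy import deepcopy
from typing import Any, Dict, Optional, Tuple

State = Dict[str, Any]         # hypothetical keys: "active", "pt", "owner", "mem"
Action = Tuple[str, int, int]  # the single toy action ("write", va, value)

def valid(s: State) -> bool:
    # Stand-in invariant: every page mapped by the active OS is owned by it.
    return all(s["owner"].get(ma) == s["active"] for ma in s["pt"].values())

def pre(s: State, a: Action) -> bool:
    _, va, _ = a
    ma = s["pt"].get(va)
    return ma is not None and s["owner"].get(ma) == s["active"]

def post(s: State, a: Action, s2: State) -> bool:
    # Only the written page changes; every other component is left untouched.
    _, va, val = a
    expected = deepcopy(s)
    expected["mem"][s["pt"][va]] = val
    return s2 == expected

def is_step(s: State, a: Action, s2: State) -> bool:
    """The one-step relation: valid(s), pre(s, a) and post(s, a, s2)."""
    return valid(s) and pre(s, a) and post(s, a, s2)

def execute(s: State, a: Action) -> Optional[State]:
    """Compute one successor state, or None when the precondition fails."""
    if not (valid(s) and pre(s, a)):
        return None
    _, va, val = a
    s2 = deepcopy(s)
    s2["mem"][s["pt"][va]] = val
    return s2
```

In this toy model the successor of a valid state is again valid, which mirrors (on a far smaller scale) the preservation lemma stated above.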

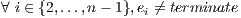

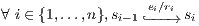

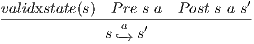

Isolation properties are ultimately expressed on execution traces rather than on execution steps; likewise, availability properties are formalized as fairness properties stating that something good will eventually happen in an execution trace. Thus, our formalization includes a definition of execution traces and proof principles to reason about them. Informally, an execution trace is defined as a stream (an infinite list) of states that are related by the transition relation, i.e. an object of the form

$$s_0 \xrightarrow{a_0} s_1 \xrightarrow{a_1} s_2 \xrightarrow{a_2} \cdots$$

such that every execution step $s_i \xrightarrow{a_i} s_{i+1}$ is valid.
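Python generators give a convenient, if informal, analogue of such streams. The sketch below assumes a toy state made of the active OS and a step counter, and a toy action that switches to another OS. The names `execute`, `is_step`, `trace` and `prefix_is_valid` are hypothetical. The generator lazily produces the (possibly infinite) sequence of steps. Since an infinite stream cannot be inspected completely at run time, the check examines a bounded prefix, which is much weaker than the coinductive reasoning available in a proof assistant.

```python
from itertools import cycle, islice
from typing import Iterator, Optional, Tuple

State = Tuple[str, int]  # toy state: (active OS, number of steps taken)
Action = str             # toy action: name of the OS to switch to
Step = Tuple[State, Action, State]

def execute(s: State, a: Action) -> Optional[State]:
    active, n = s
    return None if a == active else (a, n + 1)  # toy precondition: switch to a different OS

def is_step(s: State, a: Action, s2: State) -> bool:
    return a != s[0] and s2 == (a, s[1] + 1)

def trace(s0: State, actions: Iterator[Action]) -> Iterator[Step]:
    """Lazily produce the steps s0 -a0-> s1 -a1-> s2 ... (stops if a precondition fails)."""
    s = s0
    for a in actions:
        s2 = execute(s, a)
        if s2 is None:
            return
        yield (s, a, s2)
        s = s2  # consecutive steps share their intermediate state

def prefix_is_valid(steps: Iterator[Step], n: int) -> bool:
    """Check the first n steps; an infinite stream can only be checked up to a bound."""
    return all(is_step(s, a, s2) for s, a, s2 in islice(steps, n))
```

With `cycle(["os2", "os1"])` as the action source, `trace(("os1", 0), ...)` is an infinite round-robin schedule, and any finite prefix of it consists of valid steps.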

3.3 Isolation properties

We formally establish that the hypervisor enforces strong isolation properties: an operating system can only read and modify memory that it owns, and its behavior is independent of the state of other operating systems. The properties are established for a single step of execution, and then extended to traces.

3.3.1 Read isolation

Read isolation captures the intuition that no OS can read memory that does not belong to it. Formally, read isolation states that the execution of a  action requires that  be mapped to a machine address  that belongs to the current memory mapping of the active OS and is owned by the active OS.
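The precondition that read isolation relies on can be sketched in Python as follows. The names `read_target` and `read` and the dictionary encodings of the current page table, the ownership map and the memory are hypothetical. A read is permitted only when the virtual address is mapped, in the active OS's current page table, to a machine address owned by the active OS. Otherwise the sketch refuses the read.

```python
from typing import Dict, Optional

def read_target(active: str, current_pt: Dict[int, int],
                owner: Dict[int, str], va: int) -> Optional[int]:
    """Precondition of a read: va is mapped, in the active OS's current page
    table, to a machine address owned by the active OS. Returns that address."""
    ma = current_pt.get(va)
    if ma is None or owner.get(ma) != active:
        return None
    return ma

def read(active: str, current_pt: Dict[int, int], owner: Dict[int, str],
         memory: Dict[int, int], va: int) -> int:
    ma = read_target(active, current_pt, owner, va)
    if ma is None:
        raise PermissionError(f"{active} may not read virtual address {va:#x}")
    return memory[ma]
```

Even if a page table were to map a virtual address to a page owned by another OS, this guard would block the read. In the formal model, such a mapping is already excluded by state validity.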

3.3.2 Write Isolation

Write isolation captures the intuition that an OS cannot modify memory that it does not own. Formally, write isolation states that, unless the hypervisor is running, the execution of any action can at most modify memory pages owned by the active OS or allocate a new page for that OS.
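Write isolation can be phrased as a check on a pair of consecutive states, as in the following Python sketch. The function name `write_isolation_ok` and the encoding of the state as an ownership map plus a memory map are hypothetical. Every page whose contents or owner differ between the two states must either have been owned by the active OS beforehand or be a newly allocated page that now belongs to it. Steps taken while the hypervisor is running are not constrained.

```python
from typing import Dict

def write_isolation_ok(active: str, hypervisor_running: bool,
                       owner_before: Dict[int, str], mem_before: Dict[int, int],
                       owner_after: Dict[int, str], mem_after: Dict[int, int]) -> bool:
    if hypervisor_running:
        return True  # the property only constrains steps where the hypervisor is not running
    for ma in set(mem_before) | set(mem_after):
        unchanged = (mem_before.get(ma) == mem_after.get(ma)
                     and owner_before.get(ma) == owner_after.get(ma))
        if unchanged:
            continue
        owned_before = owner_before.get(ma) == active
        newly_allocated = ma not in owner_before and owner_after.get(ma) == active
        if not (owned_before or newly_allocated):
            return False  # a page of another OS (or of the hypervisor) was modified
    return True
```

For example, if `os1` is active, changing page 1 (owned by `os1`) or allocating a fresh page 3 for `os1` is accepted. Changing page 2, owned by `os2`, is rejected.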

3.3.3 OS Isolation

OS isolation is a 2-safety property [18, 19], cast in terms of two executions of the system, and is closely related to the non-influence property studied by Oheimb and co-workers [34, 98]. OS isolation captures the intuition that the behavior of any OS does not depend on the states of other OSs, and is expressed using the notion of equivalence between states w.r.t. an operating system  ( ). For instance, two conditions that are part of this equivalence relation are: every page table mapping of  that maps a virtual address to an RW page in one state must map that address to a page with the same content in the other state; and the hypervisor mappings of  in both states are such that if a given physical address maps to some RW page in one state, it must map to a page with the same content in the other state. Formally, OS isolation states that